Merging pretrained large language models helps data scientists and AI researchers create more powerful and specialized models. To combine multiple language models efficiently and flexibly, use mergekit.

Here are the steps to use mergekit:

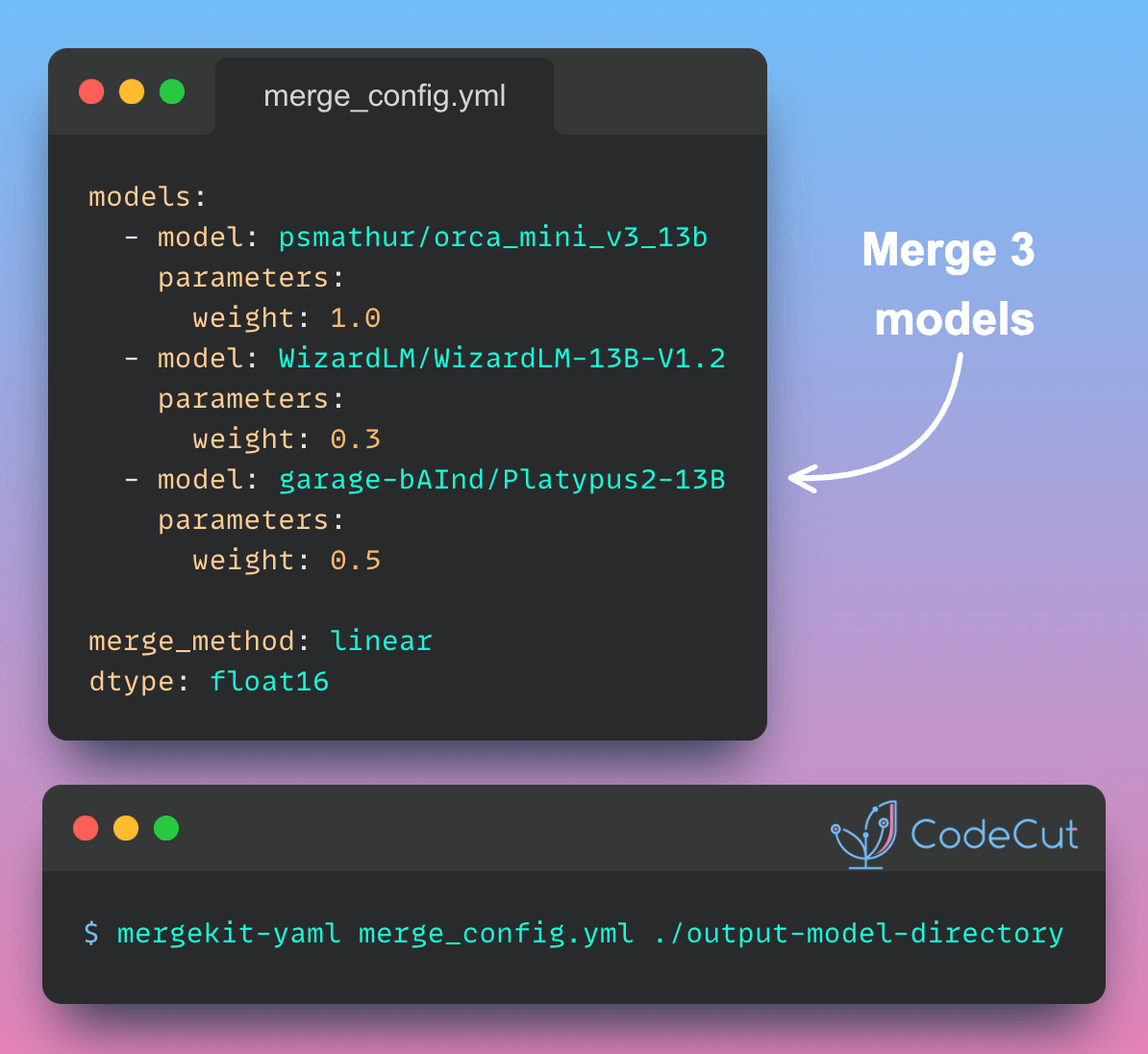

Create a YAML configuration file (e.g., merge_config.yml) specifying your merge details:

    models:
      - model: psmathur/orca_mini_v3_13b
        parameters:
          weight: 1.0
      - model: WizardLM/WizardLM-13B-V1.2
        parameters:
          weight: 0.3
      - model: garage-bAInd/Platypus2-13B
        parameters:
          weight: 0.5
    merge_method: linear
    dtype: float16

This YAML configuration describes a linear merge of three models:

- Orca Mini v3 13B (weight 1.0)

- WizardLM 13B V1.2 (weight 0.3)

- Platypus2 13B (weight 0.5)

The merge takes a weighted average of the three models' parameters. Orca Mini has the largest weight and therefore the strongest influence on the result. The `dtype: float16` setting means the merge operation uses 16-bit floating-point numbers.
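To make the weighted average concrete, here is a minimal sketch in plain Python. It is not mergekit's implementation: the function name `linear_merge`, the toy two-element "tensors", and their values are all made up for illustration. It also assumes the weights are rescaled to sum to 1 before averaging, which is my understanding of the linear method's default behavior; check the mergekit documentation before relying on that.

```python
def linear_merge(tensors, weights, normalize=True):
    """Weighted average of equally sized flat parameter lists (illustrative only)."""
    if len(tensors) != len(weights):
        raise ValueError("need exactly one weight per tensor")
    if normalize:
        total = sum(weights)
        if total == 0:
            raise ValueError("weights must not sum to zero when normalizing")
        # Assumption: rescale the weights so they sum to 1.
        weights = [w / total for w in weights]
    merged = [0.0] * len(tensors[0])
    for tensor, weight in zip(tensors, weights):
        if len(tensor) != len(merged):
            raise ValueError("all tensors must have the same length")
        for i, value in enumerate(tensor):
            merged[i] += weight * value
    return merged


# Hypothetical stand-ins for one parameter tensor from each model.
orca = [1.0, 2.0]
wizard = [3.0, 0.0]
platypus = [0.0, 4.0]

# Same weights as in the YAML configuration: 1.0, 0.3, 0.5.
merged = linear_merge([orca, wizard, platypus], [1.0, 0.3, 0.5])
print(merged)
```

Under that normalization assumption, the weights 1.0, 0.3, and 0.5 sum to 1.8, so the effective shares are roughly 56%, 17%, and 28%.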

Next, run the merge:

    mergekit-yaml merge_config.yml ./output-model-directory

The merged model will be saved in `./output-model-directory`.
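If you prefer to launch the merge from a script, the same command can be wrapped in Python. This is only a sketch: `build_merge_command` and `run_merge` are hypothetical helper names, and the code assumes the `mergekit-yaml` executable is already installed and on your `PATH`. It passes only the two positional arguments shown above.

```python
import shutil
import subprocess


def build_merge_command(config_path, output_dir):
    """Return the argument list for the mergekit-yaml command shown above."""
    return ["mergekit-yaml", config_path, output_dir]


def run_merge(config_path, output_dir):
    """Run the merge, failing early if the executable is missing."""
    cmd = build_merge_command(config_path, output_dir)
    if shutil.which(cmd[0]) is None:
        raise RuntimeError("mergekit-yaml not found on PATH; install mergekit first")
    # check=True raises CalledProcessError if the merge exits with an error.
    subprocess.run(cmd, check=True)
```

Calling `run_merge("merge_config.yml", "./output-model-directory")` mirrors the command-line invocation.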

mergekit is particularly useful for researchers who want to build custom models for specific tasks or domains by combining the strengths of multiple pretrained models.