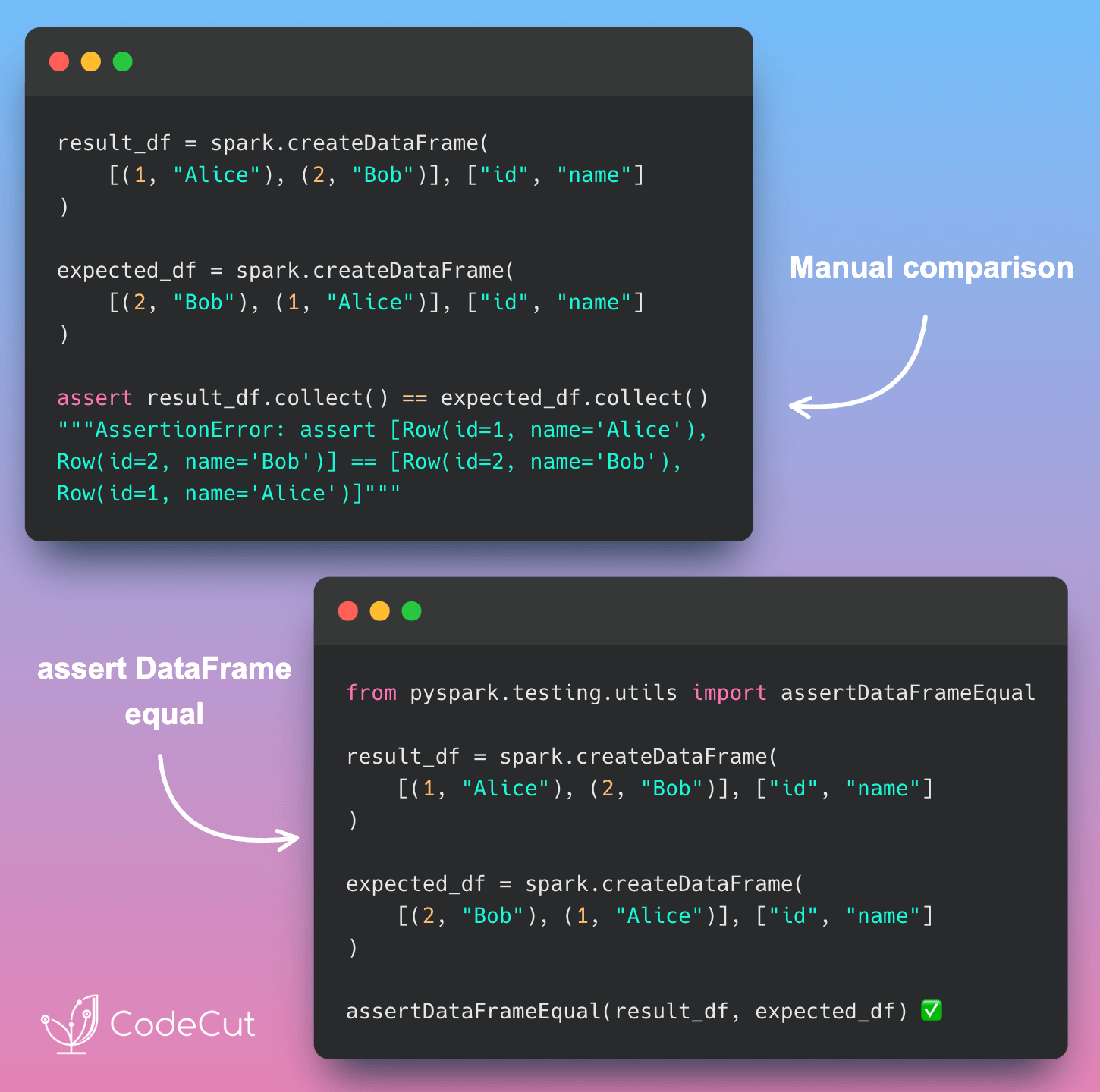

When testing PySpark code, it’s essential to verify that the output DataFrames match our expectations. However, manually comparing DataFrame outputs using collect() and equality comparison can lead to brittle tests and unclear error messages.

Let’s consider an example where we create two DataFrames, result_df and expected_df, with the same data but in a different order.

# Manual DataFrame comparison

result_df = spark.createDataFrame(

[(1, "Alice", 100), (2, "Bob", 200)], ["id", "name", "value"]

)

expected_df = spark.createDataFrame(

[(2, "Bob", 200), (1, "Alice", 100)], ["id", "name", "value"]

)

assert result_df.collect() == expected_df.collect()Running this test will fail due to the ordering issue, resulting in an unclear error message:

AssertionError: assert [Row(id=1, name='Alice', value=100), Row(id=2, name='Bob', value=200)] == [Row(id=2, name='Bob', value=200), Row(id=1, name='Alice', value=100)]

+ where [Row(id=1, name='Alice', value=100), Row(id=2, name='Bob', value=200)] = <bound method DataFrame.collect of DataFrame[id: bigint, name: string, value: bigint]>()

+ where <bound method DataFrame.collect of DataFrame[id: bigint, name: string, value: bigint]> = DataFrame[id: bigint, name: string, value: bigint].collect

+ and [Row(id=2, name='Bob', value=200), Row(id=1, name='Alice', value=100)] = <bound method DataFrame.collect of DataFrame[id: bigint, name: string, value: bigint]>()

+ where <bound method DataFrame.collect of DataFrame[id: bigint, name: string, value: bigint]> = DataFrame[id: bigint, name: string, value: bigint].collectFortunately, PySpark provides a more robust way to compare DataFrames: assertDataFrameEqual. This function allows for order-independent comparison, making it ideal for testing DataFrame outputs.

# Testing with DataFrame equality

from pyspark.testing.utils import assertDataFrameEqual

result_df = spark.createDataFrame(

[(1, "Alice", 100), (2, "Bob", 200)], ["id", "name", "value"]

)

expected_df = spark.createDataFrame(

[(2, "Bob", 200), (1, "Alice", 100)], ["id", "name", "value"]

)

assertDataFrameEqual(result_df, expected_df)In this example, the test will pass because assertDataFrameEqual ignores the order of the rows.

Another limitation of manual DataFrame comparison is that it cannot detect type mismatch. If the types of the columns are different, the comparison will pass, even if the data is not compatible.

Let’s consider an example:

# Manual DataFrame comparison

result_df = spark.createDataFrame(

[(1, "Alice", 100), (2, "Bob", 200)], ["id", "name", "value"]

)

expected_df = spark.createDataFrame(

[(1, "Alice", 100.0), (2, "Bob", 200.0)], ["id", "name", "value"]

)

assert result_df.collect() == expected_df.collect()In this example, the test will pass, even though the types of the value column are different.

Using assertDataFrameEqual, we can detect type mismatch and ensure that the data is compatible:

assertDataFrameEqual(result_df, expected_df)In this example, the test will fail because the types of the value column are different:

[DIFFERENT_SCHEMA] Schemas do not match.

--- actual

+++ expected

- StructType([StructField('id', LongType(), True), StructField('name', StringType(), True), StructField('value', LongType(), True)])

? ^ ^^

+ StructType([StructField('id', LongType(), True), StructField('name', StringType(), True), StructField('value', DoubleType(), True)])

? ^ ^^^^Conclusion

Manual DataFrame comparison using collect() and equality comparison can lead to brittle tests and unclear error messages. By using assertDataFrameEqual, we can write more robust tests that ignore the order of the rows and detect type mismatch. This approach ensures that our tests are reliable and maintainable, even as our data evolves.