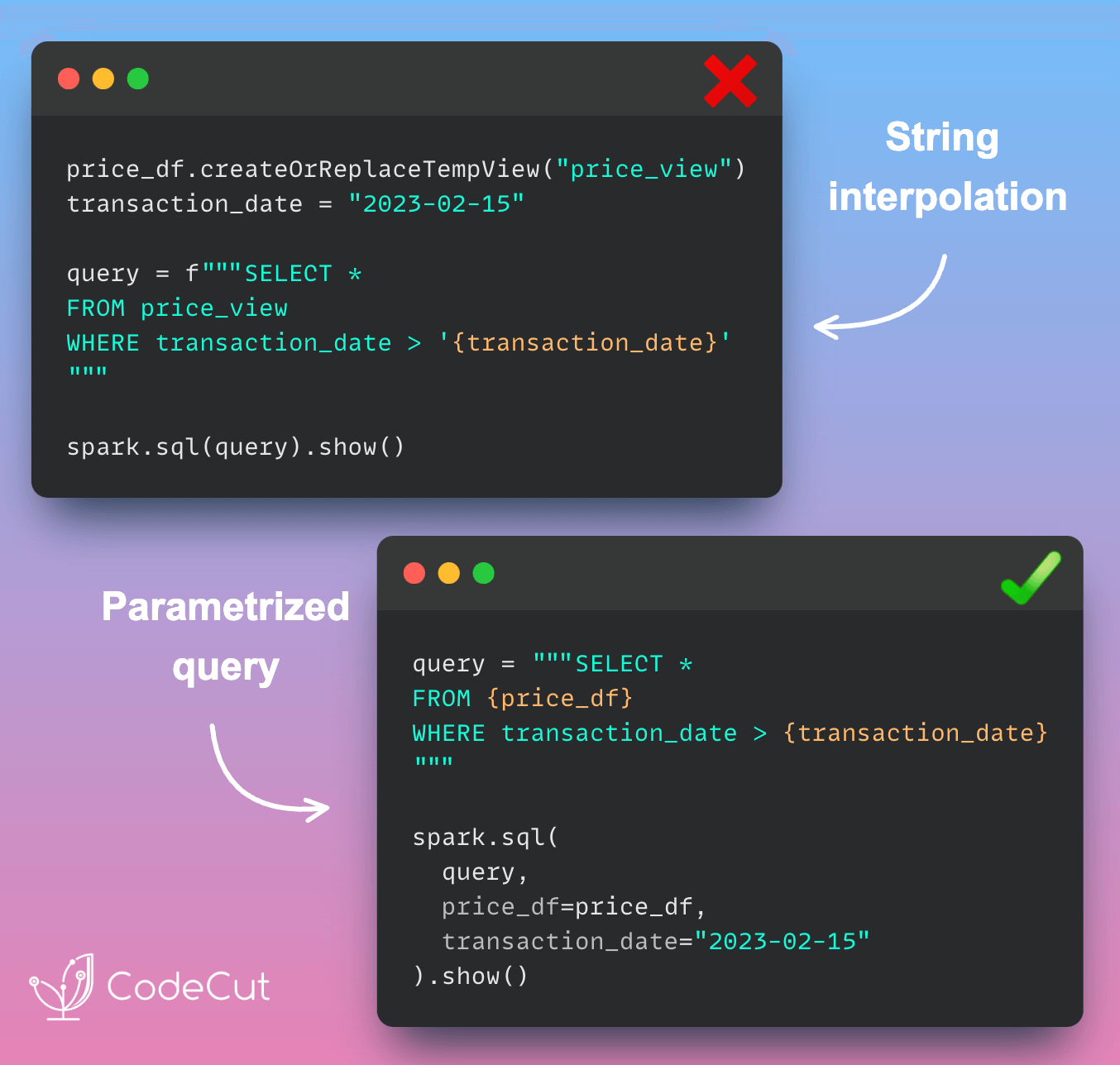

When working with Spark SQL queries, using regular Python string interpolation can lead to security vulnerabilities and require extra steps like creating temporary views. PySpark offers a better solution with parameterized queries, which:

- Protect against SQL injection

- Allow using DataFrame objects directly in queries

- Automatically handle date formatting

- Provide a more expressive way to write SQL queries

Let’s explore this concept with a practical example.

First, we’ll create a Spark DataFrame to work with:

import pandas as pd

from datetime import date

from pyspark.sql import SparkSession

spark = SparkSession.builder.getOrCreate()

item_price_pandas = pd.DataFrame({

"item_id": [1, 2, 3, 4],

"price": [4, 2, 5, 1],

"transaction_date": [

date(2023, 1, 15),

date(2023, 2, 1),

date(2023, 3, 10),

date(2023, 4, 22)

]

})

item_price = spark.createDataFrame(item_price_pandas)

item_price.show()+-------+-----+----------------+

|item_id|price|transaction_date|

+-------+-----+----------------+

| 1| 4| 2023-01-15|

| 2| 2| 2023-02-01|

| 3| 5| 2023-03-10|

| 4| 1| 2023-04-22|

+-------+-----+----------------+Now, let’s compare the traditional approach with PySpark’s parameterized queries.

Traditional approach

This approach requires creating a temporary view and manually formatting the date:

item_price.createOrReplaceTempView("item_price_view")

transaction_date = "2023-02-15"

query = f"""SELECT *

FROM item_price_view

WHERE transaction_date > '{transaction_date}'

"""

spark.sql(query).show()+-------+-----+----------------+

|item_id|price|transaction_date|

+-------+-----+----------------+

| 3| 5| 2023-03-10|

| 4| 1| 2023-04-22|

+-------+-----+----------------+PySpark’s Parameterized Query Approach

This method is more secure and doesn’t require a temporary view:

query = """SELECT *

FROM {item_price}

WHERE transaction_date > {transaction_date}

"""

spark.sql(query, item_price=item_price, transaction_date=transaction_date).show()+-------+-----+----------------+

|item_id|price|transaction_date|

+-------+-----+----------------+

| 3| 5| 2023-03-10|

| 4| 1| 2023-04-22|

+-------+-----+----------------+This method allows for easy parameter substitution and direct use of DataFrames, making your Spark SQL queries both safer and more convenient to write and maintain.