Storing data as plain Parquet files is susceptible to partial failures during writes: a system crash mid-write can leave incomplete data files behind, with no clear record of which files are valid and no clear way to recover.

Delta Lake’s write operation with ACID transactions helps solve this by:

- Ensuring either all data is written successfully or none of it is

- Maintaining a transaction log that tracks all changes

- Providing time travel capabilities to recover from failures

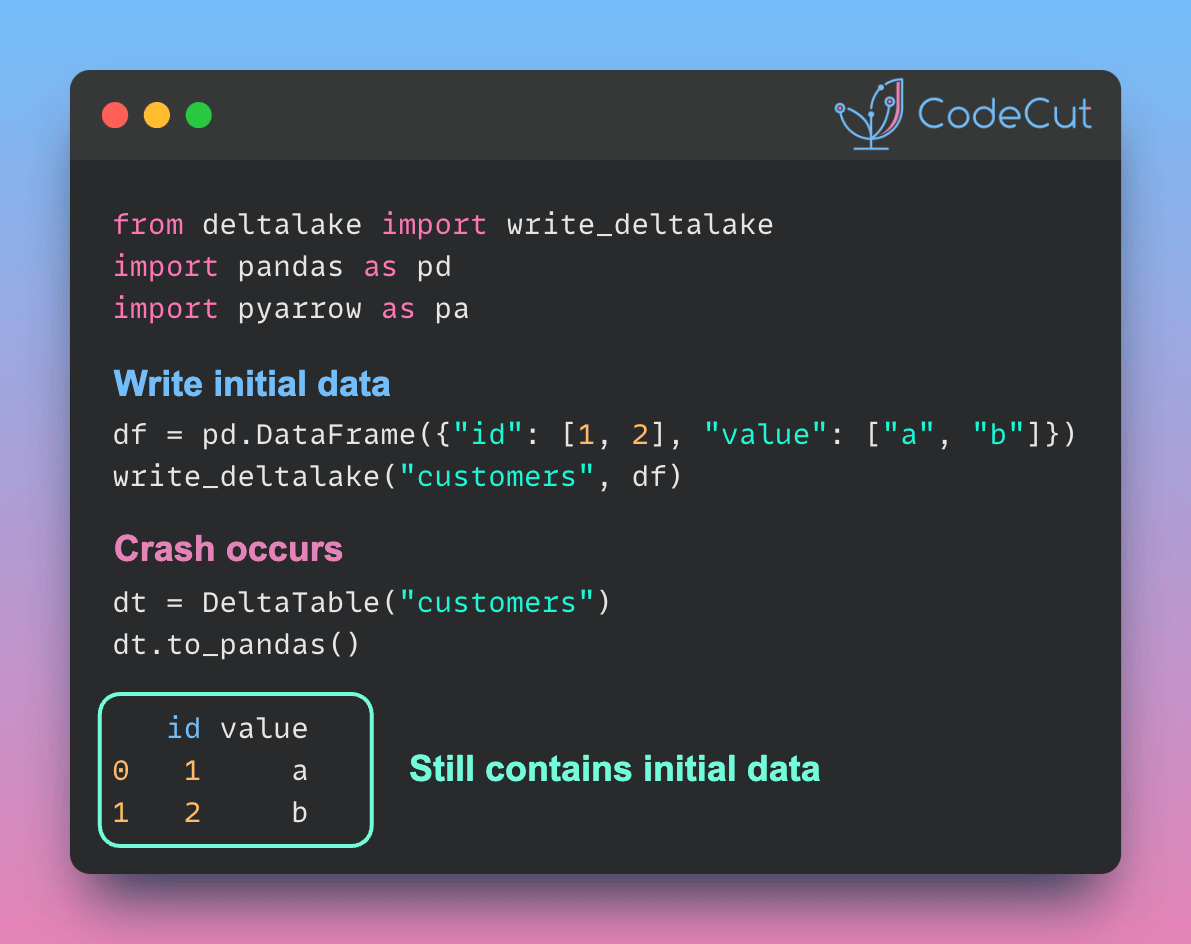
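To make the three points above more concrete, here is a toy model of a commit-log table in plain Python. It is a hypothetical sketch, not Delta Lake's actual file format or code: the class `ToyCommitLogTable`, its `_log` directory and the `crash_before_commit` flag are invented for illustration. Data files are written first and only become visible once a numbered log entry references them. A crash before the commit therefore leaves the previous version intact, and replaying the log up to an earlier version gives a simple form of time travel.

```python
import json
import os
import tempfile
import uuid


class ToyCommitLogTable:
    """Hypothetical toy table: data files plus an ordered log of commits.

    Loosely mimics the idea behind Delta Lake's transaction log; it is NOT
    Delta Lake's file format or implementation.
    """

    def __init__(self, root):
        self.root = root
        self.log_dir = os.path.join(root, "_log")
        os.makedirs(self.log_dir, exist_ok=True)

    def _commit_path(self, version):
        return os.path.join(self.log_dir, f"{version:020d}.json")

    def version(self):
        # Latest committed version; -1 means nothing has been committed yet.
        return sum(1 for f in os.listdir(self.log_dir) if f.endswith(".json")) - 1

    def append(self, rows, crash_before_commit=False):
        # Step 1: write a new data file. No commit references it yet,
        # so readers cannot see these rows.
        name = f"part-{uuid.uuid4().hex}.json"
        with open(os.path.join(self.root, name), "w") as f:
            json.dump(rows, f)

        if crash_before_commit:
            # Simulated crash: the data file exists but is never committed.
            raise RuntimeError("crash before commit")

        # Step 2: commit by publishing the next log entry in a single step.
        # os.link raises FileExistsError if that version already exists,
        # a simple stand-in for "put if absent".
        new_version = self.version() + 1
        tmp = os.path.join(self.log_dir, f".{uuid.uuid4().hex}.tmp")
        with open(tmp, "w") as f:
            json.dump({"add": [name]}, f)
        try:
            os.link(tmp, self._commit_path(new_version))
        finally:
            os.remove(tmp)
        return new_version

    def read(self, version=None):
        # Replay commits 0..version; uncommitted data files are ignored.
        if version is None:
            version = self.version()
        rows = []
        for v in range(version + 1):
            with open(self._commit_path(v)) as f:
                for name in json.load(f)["add"]:
                    with open(os.path.join(self.root, name)) as data:
                        rows.extend(json.load(data))
        return rows


# Usage: one committed append, one simulated crash, then a second append.
table = ToyCommitLogTable(tempfile.mkdtemp())
table.append([{"id": 1, "value": "a"}, {"id": 2, "value": "b"}])
try:
    table.append([{"id": 3, "value": "error"}], crash_before_commit=True)
except RuntimeError as e:
    print(f"Write failed: {e}")
print(table.version())  # 0: the crashed append never committed
table.append([{"id": 3, "value": "c"}])
print(table.read(version=0))  # "time travel" back to the first commit
```

The crashed append leaves an orphaned data file on disk, but because no log entry points at it, readers never see it. Real systems clean such files up separately (Delta Lake does this with `VACUUM`).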

Here’s an example showing Delta Lake’s reliable write operation:

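```python
from deltalake import write_deltalake, DeltaTable
import pandas as pd

initial_data = pd.DataFrame({
    "id": [1, 2],
    "value": ["a", "b"],
})

# Create the table (version 0) at the local path "customers"
write_deltalake("customers", initial_data)
```

If an append fails partway through, Delta Lake's transaction log keeps the table in its last valid state: any data files the failed write may have produced are never referenced by a commit, so readers continue to see the previous version.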

```python
try:
    # Simulate a large append that fails partway through
    new_data = pd.DataFrame({
        "id": range(3, 1003),  # 1000 new rows
        "value": ["error"] * 1000,
    })

    # Simulate a system crash during the append. The exception is raised
    # before write_deltalake runs, so no new commit reaches the log.
    raise Exception("System crash during append!")
    write_deltalake("customers", new_data, mode="append")  # never reached
except Exception as e:
    print(f"Write failed: {e}")

# Check table state: it still contains only the initial data
dt = DeltaTable("customers")
print("\nTable state after failed append:")
print(dt.to_pandas())

# Verify version history
print(f"\nCurrent version: {dt.version()}")
```

Output:

```
Write failed: System crash during append!

Table state after failed append:
   id value
0   1     a
1   2     b

Current version: 0
```
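The `Current version: 0` line is the key result: the failed attempt added no commit to the transaction log. One way to double-check this without going through the library is to read the log directly. The sketch below assumes a local table whose `_delta_log/` folder still holds every commit as a zero-padded, newline-delimited JSON file with one action (such as `add`, `metaData` or `commitInfo`) per line. It skips checkpoint and other non-commit files, so treat it as a debugging aid rather than a complete log reader. The function name `summarize_delta_log` and the demo log contents are hypothetical.

```python
import glob
import json
import os
import tempfile


def summarize_delta_log(table_path):
    """Map each committed version to the action types in its JSON commit file.

    Sketch only: assumes a local table whose _delta_log still contains every
    JSON commit; checkpoints and other files are ignored.
    """
    summary = {}
    pattern = os.path.join(table_path, "_delta_log", "*.json")
    for path in sorted(glob.glob(pattern)):
        stem = os.path.basename(path)[: -len(".json")]
        if not stem.isdigit():
            continue  # not a plain numbered commit file
        actions = []
        with open(path) as f:
            for line in f:
                if line.strip():
                    # Each non-empty line is one JSON action, e.g. {"add": {...}}
                    actions.extend(json.loads(line).keys())
        summary[int(stem)] = actions
    return summary


# Self-contained demo on a hand-written log (hypothetical contents).
demo_root = tempfile.mkdtemp()
os.makedirs(os.path.join(demo_root, "_delta_log"))
with open(os.path.join(demo_root, "_delta_log", f"{0:020d}.json"), "w") as f:
    for action in ({"protocol": {}}, {"metaData": {}}, {"add": {}}, {"commitInfo": {}}):
        f.write(json.dumps(action) + "\n")
demo_summary = summarize_delta_log(demo_root)
print(demo_summary)

# Against the example table, summarize_delta_log("customers") would be
# expected to list only version 0 after the failed append.
```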