Table of Contents

- Introduction

- Introduction to DeepSeek and LangChain

- LangChain + DeepSeek: Integration Tutorial

- Combining Ollama and DeepSeek in LangChain

- Conclusion

Introduction

Data science workflows require both technical precision and clear communication. Many data science tasks need more than text generation—they require step-by-step problem solving. For example:

- Debugging requires tracing logic, spotting data issues, and interpreting metrics.

- Explaining features means sequencing steps clearly so that non-technical stakeholders understand.

General-purpose language models can struggle with data science reasoning: they may misapply statistical concepts or produce code that looks plausible but fails on real data. DeepSeek models focus on reasoning and coding, which makes them a good fit for analytical problems that must be worked through step by step and for generating code for data applications. LangChain connects these models to production workflows, handling prompts, responses, and output parsing.

Key Takeaways

Here’s what you’ll learn:

- Access DeepSeek’s reasoning-focused models through familiar LangChain interfaces for better analytical problem solving

- Build production chains with structured prompts, streaming responses, and Pydantic validation

- Deploy models locally via Ollama for sensitive data projects requiring complete privacy

- Choose between DeepSeek-V3 for general tasks vs DeepSeek-R1 for complex reasoning workflows

- Cut API costs by running smaller, reasoning-optimized models locally, and weigh the quality trade-offs against the larger hosted models

Introduction to DeepSeek and LangChain

Before diving into integration steps, let’s understand both tools and why they work well together.

What is DeepSeek?

DeepSeek releases open-weight language models that perform well on reasoning and coding tasks. You can call them through DeepSeek's hosted API or run them locally; the local option suits sensitive data that can't leave your system.

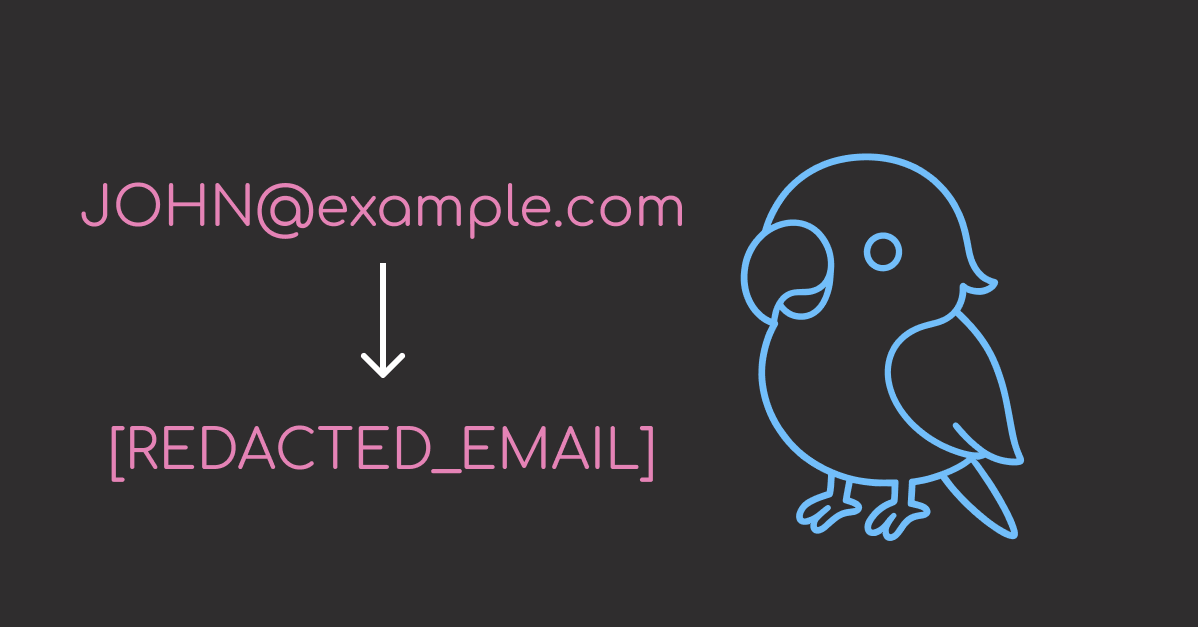

This tutorial mainly uses the DeepSeek API. You'll need to create a DeepSeek account, generate an API key, and top up your account balance. Once you have your API key, store it in a .env file so it can be loaded as an environment variable:

```
# Create a .env file if it doesn't exist, then add this line:
DEEPSEEK_API_KEY="sk-your-api-key-here"
```

Make sure to load the environment variable in your script:

```python
from dotenv import load_dotenv

load_dotenv()  # Load DEEPSEEK_API_KEY from .env into the environment
```
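load_dotenv() does not raise an error when the .env file or the key is missing, so a mistake only shows up later as an authentication failure. A minimal sketch of a fail-fast check, assuming the variable name DEEPSEEK_API_KEY used above; the helper name require_env is hypothetical:

```python
import os


def require_env(name: str) -> str:
    """Return the value of an environment variable or fail with a clear message."""
    value = os.environ.get(name, "").strip()
    if not value:
        raise RuntimeError(
            f"{name} is not set. Add it to your .env file and call load_dotenv() first."
        )
    return value


# Hypothetical usage, after load_dotenv() has run:
# api_key = require_env("DEEPSEEK_API_KEY")
```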

What is LangChain?

LangChain is a framework for creating AI applications using language models.

Rather than writing custom code for model interactions, response handling, and error management, you can use LangChain’s ready-made components to build applications.

To get started with LangChain and DeepSeek integration, install:

```bash
pip install langchain langchain-deepseek python-dotenv
```

In this installation:

- langchain provides the core framework

- langchain-deepseek enables DeepSeek model integration

- python-dotenv loads your API key from the .env file

Why combine DeepSeek with LangChain?

DeepSeek’s models excel at reasoning-heavy tasks, but integrating them into larger workflows can be challenging without proper tooling. LangChain provides the infrastructure to build production-ready applications around DeepSeek’s capabilities. You can create chains that validate inputs, format prompts properly, parse structured outputs, and handle errors gracefully. This combination gives you both powerful reasoning models and the tools to deploy them reliably.

LangChain + DeepSeek: Integration Tutorial

Now that we understand both tools, let’s build a complete integration that puts DeepSeek’s reasoning capabilities to work in your data science projects.

Using DeepSeek Chat Models

DeepSeek offers two main model families through its API:

- DeepSeek-V3 (model="deepseek-chat"): general-purpose model with tool calling and structured output

- DeepSeek-R1 (model="deepseek-reasoner"): focused on reasoning tasks

After setting up your environment, you’ll need to select a model for your tasks. Here’s how to use the chat model with LangChain:

```python
from langchain_core.messages import HumanMessage, SystemMessage
from langchain_deepseek import ChatDeepSeek

# Initialize the chat model (reads DEEPSEEK_API_KEY from the environment)
llm = ChatDeepSeek(
    model="deepseek-chat",  # Can also use "deepseek-reasoner"
    temperature=0,  # 0 for more deterministic responses
    max_tokens=None,  # None means model default
    timeout=None,  # API request timeout
    max_retries=2,  # Retry failed requests
)

# Create a conversation with system and user messages
messages = [
    SystemMessage(content="You are a data scientist who writes efficient Python code"),
    HumanMessage(
        content="Given a DataFrame with columns 'product' and 'sales', calculate the total sales for each product."
    ),
]

# Generate a response
response = llm.invoke(messages)
print(response.content)
```

You can also use asynchronous operations for handling multiple requests without blocking:

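```python
import asyncio


async def generate_async():
    response = await llm.ainvoke(messages)
    return response.content


# In a Jupyter notebook, use `await generate_async()` instead of asyncio.run
print(asyncio.run(generate_async()))
```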

Async calls let you send several DeepSeek requests concurrently (for example with asyncio.gather, or with the abatch method that LangChain models and chains provide), so total waiting time can approach that of the slowest single request instead of the sum of all of them. This matters for batch jobs and pipelines that need to scale.
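The generate_async function above sends a single request; the savings come from awaiting several requests together. A minimal sketch with asyncio.gather, using a hypothetical fake_ainvoke coroutine that sleeps to stand in for network latency, so it runs without an API key:

```python
import asyncio
import time


async def fake_ainvoke(prompt: str, delay: float = 0.2) -> str:
    """Hypothetical stand-in for llm.ainvoke: waits `delay` seconds like a network call."""
    await asyncio.sleep(delay)
    return f"answer to: {prompt}"


async def run_concurrently(prompts: list[str]) -> list[str]:
    # gather starts all coroutines together and returns results in input order
    return await asyncio.gather(*(fake_ainvoke(p) for p in prompts))


if __name__ == "__main__":
    start = time.perf_counter()
    answers = asyncio.run(run_concurrently(["q1", "q2", "q3"]))
    elapsed = time.perf_counter() - start
    print(answers)
    print(f"{elapsed:.2f}s")  # roughly one delay, not three
```

With the real model, you would replace fake_ainvoke with a call to llm.ainvoke(messages); alternatively, await llm.abatch([...]) on a list of inputs.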

Building Chains with DeepSeek

Individual model calls work for simple tasks, but data science workflows usually need multiple steps: input validation, prompt formatting, model inference, and output processing. Manual management of these steps becomes tedious and error-prone in larger projects.

LangChain lets you connect components into chains. Here's how to build a code-generation chain with DeepSeek:

```python
from langchain_core.prompts import ChatPromptTemplate

# Create a structured prompt template
prompt = ChatPromptTemplate.from_messages(
    [
        ("system", "You are a data scientist who writes {language} code"),
        ("human", "{input}"),
    ]
)

# Build the chain
chain = prompt | llm

# Execute the chain
result = chain.invoke(
    {
        "language": "SQL",
        "input": "Given a table with columns 'product' and 'sales', calculate the total sales for each product.",
    }
)
print(result.content)
```

Let’s break down this code:

- ChatPromptTemplate.from_messages() creates a template with placeholders in curly braces ({language} and {input}). These placeholders get replaced with actual values when the chain runs.

- The prompt template contains tuples for different message types: ("system", "...") for system instructions and ("human", "...") for user messages.

- The pipe operator (|) connects components. When you write prompt | llm, it means "send the output of prompt to llm as input." This creates a processing pipeline.

- chain.invoke() runs the chain with a dictionary of values that replace the placeholders. The dictionary keys match the placeholder names in the template.

- Behind the scenes, LangChain formats the messages, sends them to DeepSeek, and returns the model's response.

This approach lets you build complex workflows while keeping your code clean and maintainable.
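To make the pipe operator less magical, here is a toy re-implementation of the idea in plain Python. It is not LangChain's code: the Step class and the fake_llm step are hypothetical, and the prompt step simply fills the {placeholders} with str.format, the way a template fills its variables.

```python
from typing import Any, Callable


class Step:
    """Toy stand-in for a LangChain runnable: wraps a function and supports `|`."""

    def __init__(self, func: Callable[[Any], Any]):
        self.func = func

    def __or__(self, other: "Step") -> "Step":
        # prompt | llm: feed this step's output into the next step's input
        return Step(lambda value: other.func(self.func(value)))

    def invoke(self, value: Any) -> Any:
        return self.func(value)


# Prompt step: fill the placeholders from the input dictionary
toy_prompt = Step(
    lambda values: [
        ("system", "You are a data scientist who writes {language} code".format(**values)),
        ("human", values["input"]),
    ]
)

# Hypothetical model step: echoes the last message instead of calling an API
fake_llm = Step(lambda messages: f"[model reply to] {messages[-1][1]}")

toy_chain = toy_prompt | fake_llm
print(toy_chain.invoke({"language": "SQL", "input": "Total sales per product"}))
```

LangChain's real runnables add streaming, batching, and async support on top of this, but the data flow is the same: each component's output becomes the next component's input.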

Streaming Responses

When working with complex data analysis or long-form explanations, users often wait several seconds for complete responses. This creates a poor experience in interactive applications like data exploration notebooks or real-time dashboards.

For this reason, you might want to stream tokens as they’re generated:

```python
from langchain_core.output_parsers import StrOutputParser

streamed_chain = prompt | llm | StrOutputParser()

for chunk in streamed_chain.stream(
    {
        "language": "SQL",
        "input": "Given a table with columns 'product' and 'sales', calculate the total sales for each product.",
    }
):
    print(chunk, end="", flush=True)
```

In the streaming example above, several key elements work together:

- StrOutputParser() converts the model's message output into plain text strings.

- The chain uses the stream() method instead of invoke() to receive content in chunks.

- The for-loop processes each text fragment as it arrives:
  - print(chunk, end="") displays the text without adding newlines between chunks
  - flush=True ensures text appears immediately without buffering

When you run this code, you’ll see words appear progressively rather than waiting for the entire response to complete.
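If you also need the complete text after streaming (for example, to log or parse it), collect the chunks as you print them. A minimal sketch, with a hypothetical fake_stream generator standing in for streamed_chain.stream(...) so it runs offline:

```python
from typing import Iterable, Iterator


def fake_stream() -> Iterator[str]:
    """Hypothetical stand-in for streamed_chain.stream(...): yields text fragments."""
    yield from ["SELECT product, ", "SUM(sales) AS total_sales ", "FROM sales ", "GROUP BY product;"]


def print_and_collect(chunks: Iterable[str]) -> str:
    parts = []
    for chunk in chunks:
        print(chunk, end="", flush=True)  # show each fragment as soon as it arrives
        parts.append(chunk)
    print()  # end the line once the stream is finished
    return "".join(parts)


full_text = print_and_collect(fake_stream())
```

With the real chain, pass streamed_chain.stream({...}) with the same input dictionary as above.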

Structured Output

Data science applications often need to extract specific information from model responses and feed it into other systems. Free-text responses make this difficult because parsing natural language output reliably requires additional processing and error handling.

When you need structured data instead of free text, you can use DeepSeek’s structured output capability with LangChain:

```python
from typing import List

from langchain_core.prompts import ChatPromptTemplate
from pydantic import BaseModel, Field


# Define the output schema (descriptions help the model fill each field)
class ApplicantProfile(BaseModel):
    first_name: str = Field(description="Applicant's first name")
    last_name: str = Field(description="Applicant's last name")
    experience_years: int = Field(description="Years of professional experience")
    primary_skill: List[str] = Field(description="Main skills mentioned in the text")


# Bind the Pydantic model to the LLM for structured output
structured_llm = llm.with_structured_output(ApplicantProfile)

# Create a chain
prompt_structured = ChatPromptTemplate.from_messages(
    [
        (
            "system",
            "You are a helpful assistant. Provide your output in the requested structured format.",
        ),
        (
            "human",
            "Extract name, years of experience, and primary skills from {job_description}.",
        ),
    ]
)

chain_structured = prompt_structured | structured_llm

# Get structured output
job_description = "Khuyen Tran is a data scientist with 5 years of experience, skilled in Python and machine learning."
profile = chain_structured.invoke({"job_description": job_description})

print(f"First name: {profile.first_name}")
print(f"Last name: {profile.last_name}")
print(f"Years of experience: {profile.experience_years}")
print(f"Primary skills: {profile.primary_skill}")
```

This example defines an ApplicantProfile Pydantic model and uses with_structured_output to instruct the DeepSeek model to return data in that format. The chain returns an ApplicantProfile instance instead of free text, which is useful for data extraction and for building predictable AI workflows.
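To see what the schema enforces, the sketch below isolates the Pydantic v2 validation step on two hand-written JSON strings (hypothetical stand-ins for model output): a well-formed record is accepted and a malformed one is rejected with field-level errors. It illustrates the schema's checks, not LangChain's internals.

```python
from typing import List

from pydantic import BaseModel, ValidationError


class ApplicantProfile(BaseModel):
    first_name: str
    last_name: str
    experience_years: int
    primary_skill: List[str]


# Hypothetical payloads standing in for model output
good = '{"first_name": "Khuyen", "last_name": "Tran", "experience_years": 5, "primary_skill": ["Python", "machine learning"]}'
bad = '{"first_name": "Khuyen", "last_name": "Tran", "experience_years": "five", "primary_skill": "Python"}'

profile = ApplicantProfile.model_validate_json(good)
print(profile.experience_years)

try:
    ApplicantProfile.model_validate_json(bad)
except ValidationError as err:
    # Pydantic reports every field that failed, which you can log or use to re-prompt
    print(f"{err.error_count()} validation errors")
```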

Combining Ollama and DeepSeek in LangChain

While DeepSeek’s cloud API provides powerful reasoning capabilities, some data science projects involve sensitive data that cannot leave your infrastructure. Regulatory requirements, proprietary datasets, or security policies may prevent you from using external APIs entirely.

This tutorial focuses on the DeepSeek API, but DeepSeek models can also run locally with Ollama, which keeps your data on your own machine.

Running DeepSeek Locally with Ollama

Setting up local AI inference involves downloading large model files and configuring your system properly. Ollama simplifies this process by handling model management and serving automatically:

```bash
# Install the LangChain integration for Ollama
pip install langchain-ollama

# Pull the DeepSeek model to your local machine (requires Ollama to be installed)
ollama pull deepseek-r1:1.5b  # Example tag; other versions may be available
```
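Before pointing LangChain at the local server, you may want to check that Ollama is running and the model has been pulled. A minimal sketch using only the standard library, assuming Ollama's REST endpoint GET /api/tags (which lists locally available models) at the default address; the function name ollama_has_model is hypothetical:

```python
import json
import urllib.request


def ollama_has_model(model: str, base_url: str = "http://localhost:11434", timeout: float = 2.0) -> bool:
    """Return True if the Ollama server at base_url responds and lists `model`."""
    try:
        with urllib.request.urlopen(f"{base_url}/api/tags", timeout=timeout) as resp:
            data = json.load(resp)
    except (OSError, ValueError):
        # Server not running or unreachable (OSError), or the body wasn't JSON (ValueError)
        return False
    names = {m.get("name") for m in data.get("models", [])}
    return model in names


print(ollama_has_model("deepseek-r1:1.5b"))
```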

Once downloaded, you can access it through LangChain just like any other Ollama model:

```python
from langchain_core.messages import HumanMessage, SystemMessage
from langchain_ollama import ChatOllama

local_deepseek_ollama = ChatOllama(
    model="deepseek-r1:1.5b",
    temperature=0.7,
    base_url="http://localhost:11434",  # Default address of a local Ollama server
)

response_local = local_deepseek_ollama.invoke(
    [
        SystemMessage(content="You are a data scientist who writes efficient Python code"),
        HumanMessage(
            content="Given a DataFrame with columns 'product' and 'sales', calculate the total sales for each product."
        ),
    ]
)

print("Response from local DeepSeek (via Ollama):")
print(response_local.content)
```

Apart from using ChatOllama instead of ChatDeepSeek, the main difference is the base_url, which points to your running Ollama instance (by default on port 11434). No API key is needed.
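One practical difference with the R1 models: depending on your Ollama and langchain-ollama versions, response_local.content may start with the model's reasoning wrapped in <think>...</think> tags. If you only want the final answer, a small regex helper (hypothetical name strip_reasoning) can remove that block:

```python
import re

THINK_BLOCK = re.compile(r"<think>.*?</think>", flags=re.DOTALL)


def strip_reasoning(text: str) -> str:
    """Remove <think>...</think> sections and surrounding whitespace from R1-style output."""
    return THINK_BLOCK.sub("", text).strip()


raw = "<think>\nThe user wants a groupby.\n</think>\n\ndf.groupby('product')['sales'].sum()"
print(strip_reasoning(raw))  # df.groupby('product')['sales'].sum()
```

Applied to a real response, you would call strip_reasoning(response_local.content).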

Performance and cost considerations for DeepSeek API vs. local Ollama

When building data science applications, choosing between API-based and local deployment involves trade-offs between performance, cost, privacy, and maintenance complexity.

The following table shows how DeepSeek’s cloud API compares to local deployment with Ollama:

| Feature | DeepSeek API (Cloud) | Ollama (Local) |
|---|---|---|
| Best for | Fast prototyping, minimal setup, latest models | Privacy, offline use, cost-efficient production |
| Hardware | None required | Depends on model size; larger variants need high RAM (16GB+) and significant disk space |
| Setup | Account, API key, and balance only | Requires installing Ollama and downloading model weights (several GB for mid-sized variants) |
| Cost | Pay per token (~$0.001–0.002 per 1K tokens; check current pricing) | No per-token fees after setup; you pay for hardware and power |
| Scalability | Scales automatically | Limited by local resources |
| Privacy | Data leaves your infrastructure (potential risk) | Full data control and offline capability |
| Maintenance | Managed by DeepSeek | User-managed (updates, hardware) |
| Recommendation | Use for prototyping and complex reasoning | Use for production with sensitive or high-volume data |
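
To turn the per-token figure in the table into a budget, multiply your expected monthly volume by the price. A small sketch, using the table's approximate $0.001 to $0.002 per 1K tokens as an assumed price range (DeepSeek's pricing page lists input and output tokens separately, so check current rates); the workload numbers are hypothetical:

```python
def monthly_api_cost(tokens_per_month: int, price_per_1k_tokens: float) -> float:
    """Estimated API spend: (tokens / 1,000) * price per 1K tokens."""
    return tokens_per_month / 1_000 * price_per_1k_tokens


# Hypothetical workload: 2,000 requests/day, ~1,500 tokens each, 30 days
tokens = 2_000 * 1_500 * 30  # 90,000,000 tokens

low = monthly_api_cost(tokens, 0.001)
high = monthly_api_cost(tokens, 0.002)
print(f"${low:,.0f} to ${high:,.0f} per month")  # $90 to $180 per month
```

Compare that estimate with the hardware, electricity, and maintenance cost of running Ollama yourself; "no per-token fees" does not mean zero cost.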

Recommendation for data science teams:

- Start with the API for prototyping and experimentation

- Move to local deployment for production applications with sensitive data or high-volume processing

- Use a hybrid approach with local models for routine tasks and an API for complex reasoning that benefits from larger models
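The hybrid approach in the last bullet boils down to a routing rule. A minimal sketch of one such rule, with hypothetical flags and backend labels; in practice each label would map to a configured model such as ChatOllama(model="deepseek-r1:1.5b") or ChatDeepSeek(model="deepseek-reasoner"):

```python
from dataclasses import dataclass


@dataclass
class Task:
    prompt: str
    contains_sensitive_data: bool = False
    needs_complex_reasoning: bool = False


def choose_backend(task: Task) -> str:
    """Route a task to 'local' (Ollama) or 'api' (DeepSeek cloud)."""
    if task.contains_sensitive_data:
        return "local"  # sensitive data never leaves your infrastructure
    if task.needs_complex_reasoning:
        return "api"  # larger hosted models for hard reasoning
    return "local"  # routine work stays on the cheaper local model


print(choose_backend(Task("Summarize patient notes", contains_sensitive_data=True)))  # local
print(choose_backend(Task("Design an A/B test analysis", needs_complex_reasoning=True)))  # api
```

Privacy is checked first so that a sensitive task is never sent to the API, even when it would benefit from a larger model.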

Conclusion

This tutorial provided a comprehensive guide to integrating LangChain with DeepSeek. You’ve learned how to use DeepSeek’s API for chat, chaining, streaming, and structured output. We also briefly covered running DeepSeek locally via Ollama, offering a path to privacy-enhanced AI. By combining LangChain’s framework with DeepSeek’s powerful models, you are well-equipped to build sophisticated data science applications.