Motivation

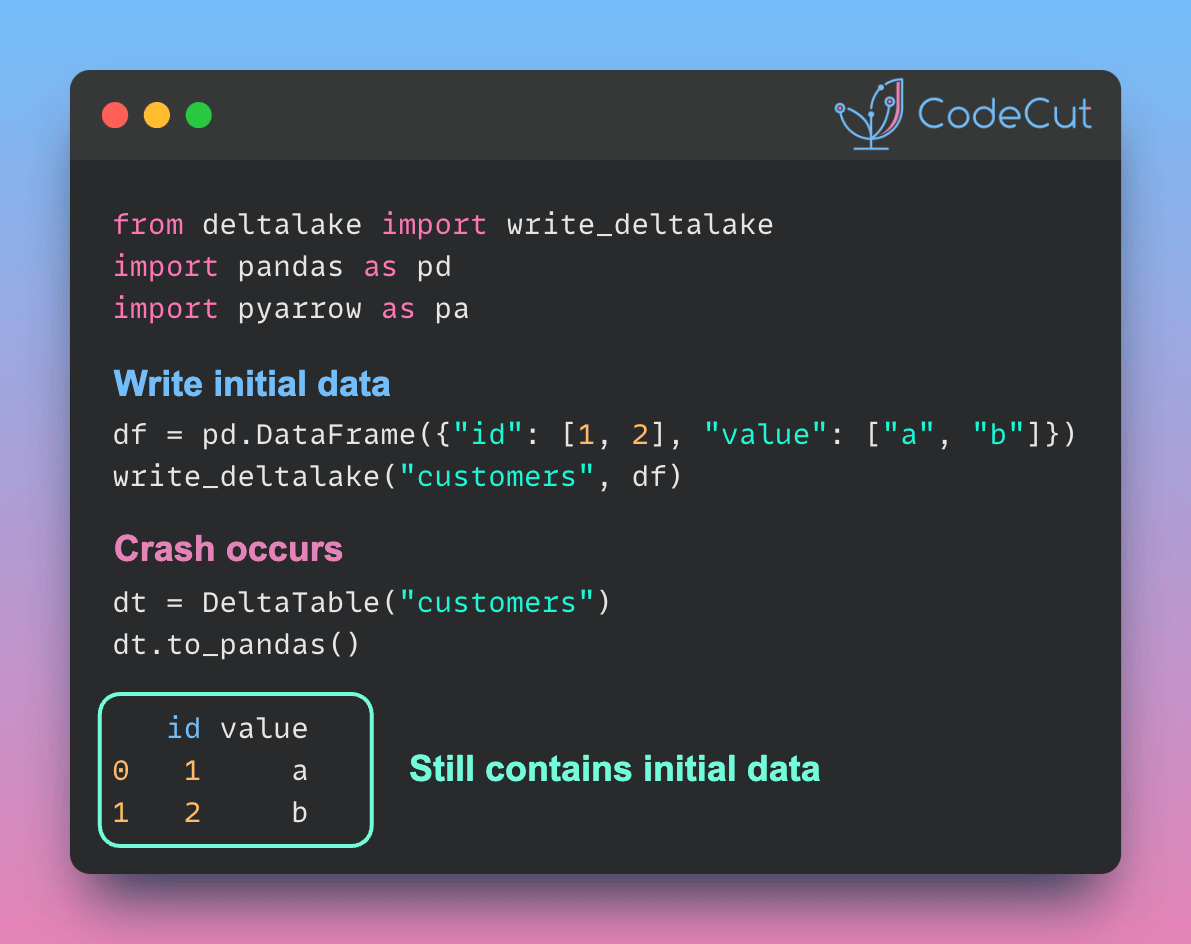

Traditional data deletion approaches are problematic for data engineers and analysts because they require loading entire datasets into memory and risk data corruption during modification.

To demonstrate these issues, let’s first create a sample dataset that resembles a real-world customer database with information about account types, balances, and activity metrics:

import pandas as pd

import numpy as np

# Create a large example dataset (1 million rows)

df = pd.DataFrame({

'customer_id': range(1_000_000),

'status': ['active', 'inactive'] * (1_000_000 // 2),

'balance': np.random.uniform(0, 10000, 1_000_000),

})

df.to_parquet('customer_data.parquet')Now let’s examine the typical approach used to delete records from this dataset. Imagine we need to remove all basic accounts with low balances and minimal activity – a common data maintenance task:

# Traditional problematic approach

try:

# 1. Load entire file (1M rows) into memory

data = pd.read_parquet('customer_data.parquet') # Memory intensive

# 2. Filter out inactive basic accounts with low balance

data = data[~((data['status'] == 'inactive') &

(data['balance'] < 100))]

# 3. Overwrite file - risk of corruption if process fails

data.to_parquet('customer_data.parquet') # Unsafe

except MemoryError:

print("Not enough memory to process 1M rows")This approach has several critical issues:

- Memory Usage: The entire dataset must be loaded into memory, which can cause OOM (Out of Memory) errors with large datasets

- Data Corruption: If the process fails during the write operation, the data file could be left in a corrupted state

- No Audit Trail: No record of what data was deleted or when

- No Recovery: Once data is overwritten, there’s no way to recover the previous state

Introduction to delta-rs

delta-rs is a native Rust library for Delta Lake with Python bindings that provides robust data manipulation capabilities. Install it using:

pip install deltalakeAs covered in the article about efficient data appending, delta-rs provides efficient data modification capabilities without loading entire datasets into memory.

Predicate-based Deletion

Building on delta-rs’s partitioning capabilities and change tracking, the delete operation allows efficient removal of specific records.

First, let’s recreate our test dataset in the Delta Lake format:

from deltalake import write_deltalake, DeltaTable

import pandas as pd

import numpy as np

# Create a large example dataset (1 million rows)

df = pd.DataFrame({

'customer_id': range(1_000_000),

'status': ['active', 'inactive'] * (1_000_000 // 2),

'balance': np.random.uniform(0, 10000, 1_000_000),

})

# Write to Delta format

write_deltalake("./customer_data", df)Now, instead of using the problematic traditional approach, we can leverage delta-rs to handle the deletion in a much safer and more efficient way:

# Perform safe deletion

dt = DeltaTable("./customer_data")

dt.delete("""

status = 'inactive' AND

balance < 100.0

""")When running this operation, delta-rs provides detailed metrics about the deletion process:

{'num_added_files': 1,

'num_removed_files': 1,

'num_deleted_rows': 5019,

'num_copied_rows': 994981,

'execution_time_ms': 220121,

'scan_time_ms': 69760,

'rewrite_time_ms': 148}This approach provides several safety guarantees:

- Time Travel: Previous versions can be accessed if needed

- Memory Efficient: Only metadata and necessary data blocks are loaded

- Transactional: Changes are atomic – either complete successfully or not at all

- Audit Trail: All operations are logged in the transaction log

You can verify the operation and access previous versions:

# Check operation history

print("Operation History:")

for entry in dt.history():

print(f"Version {entry['version']}: {entry['operation']}")

# Access previous version if needed

dt_previous = DeltaTable("./customer_data", version=dt.version() - 1)

print("\nPrevious version data:")

print(dt_previous.to_pandas().head(10))Output:

Operation History:

Version 1: DELETE

Version 0: CREATE TABLE

Previous version data:

customer_id status balance

0 0 active 2281.154365

1 1 inactive 8330.057614

2 2 active 9908.828674

3 3 inactive 3766.795979

4 4 active 8536.698571

5 5 inactive 8681.325433

6 6 active 1610.351636

7 7 inactive 2537.934431

8 8 active 4278.094055

9 9 inactive 3531.708249Conclusion

The predicate-based deletion feature in delta-rs transforms a potentially dangerous operation into a safe, transactional process. When combined with its partition overwrite capabilities, it provides a comprehensive solution for data management.