Motivation

Getting started is often the most challenging part when building ML projects. How should you structure your repository? Which standards should you follow? Will your teammates be able to reproduce the results of your experimentations?

Instead of trying to find an ideal repository structure, wouldn’t it be nice to have a template to get started?

That is why I created data-science-template, consolidating best practices I’ve learned over the years about structuring data science projects.

This template allows you to:

✅ Create a readable structure for your project

✅ Efficiently manage dependencies in your project

✅ Create short and readable commands for repeatable tasks

✅ Rerun only modified components of a pipeline

✅ Observe and automate your code

✅ Enforce type hints at runtime

✅ Check issues in your code before committing

✅ Automatically document your code

✅ Automatically run tests when committing your code

Tools Used in This Template

This template is lightweight and uses only tools that can generalize to various use cases. Those tools are:

- Poetry: manage Python dependencies

- Prefect: orchestrate and observe your data pipeline

- Pydantic: validate data using Python type annotations

- pre-commit plugins: ensure your code is well-formatted, tested, and documented, following best practices

- Makefile: automate repeatable tasks using short commands

- GitHub Actions: automate your CI/CD pipeline

- pdoc: automatically create API documentation for your project

Usage

To download the template, start by installing Cookiecutter:

pip install cookiecutterCreate a project based on the template:

cookiecutter https://github.com/khuyentran1401/data-science-templateTry out the project by following these instructions.

In the following few sections, we will detail some valuable features of this template.

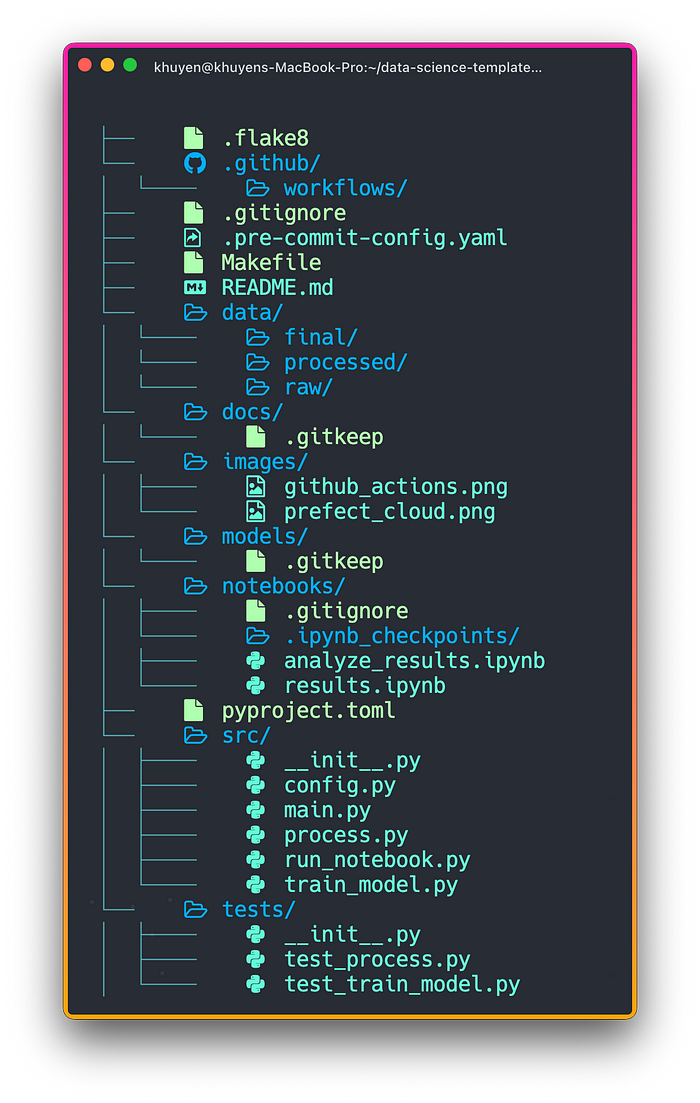

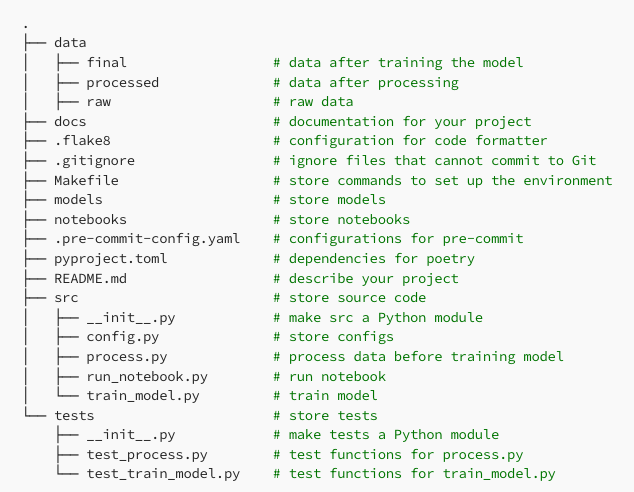

Create a Readable Structure

The structure of the project created from the template is standardized and easy to understand.

Here is the summary of the roles of these files:

Efficiently Manage Dependencies

Poetry is a Python dependency management tool and is an alternative to pip.

With Poetry, you can:

- Separate the main dependencies and the sub-dependencies into two separate files (instead of storing all dependencies in

requirements.txt) - Remove all unused sub-dependencies when removing a library

- Avoid installing new packages that conflict with the existing packages

- Package your project in several lines of code

and more.

Find the instruction on how to install Poetry here.

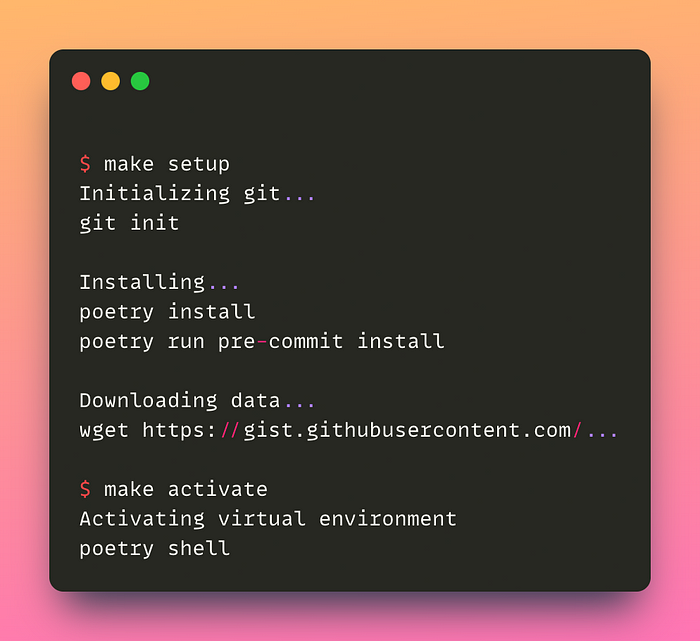

Create Short Commands for Repeatable Tasks

Makefile allows you to create short and readable commands for tasks. If you are not familiar with Makefile, check out this short tutorial.

You can use Makefile to automate tasks such as setting up the environment:

initialize_git:

@echo "Initializing git..."

git init

install:

@echo "Installing..."

poetry install

poetry run pre-commit install

activate:

@echo "Activating virtual environment"

poetry shell

download_data:

@echo "Downloading data..."

wget https://gist.githubusercontent.com/khuyentran1401/a1abde0a7d27d31c7dd08f34a2c29d8f/raw/da2b0f2c9743e102b9dfa6cd75e94708d01640c9/Iris.csv -O data/raw/iris.csv

setup: initialize_git install download_dataNow, whenever others want to set up the environment for your projects, they just need to run the following:

make setup

make activateAnd a series of commands will be run!

Rerun Only Modified Components of a Pipeline

Make is also useful when you want to run a task whenever its dependencies are modified.

As an example, let’s capture the connection between files in the following diagram through a Makefile:

data/processed/xy.pkl: data/raw src/process.py

@echo "Processing data..."

python src/process.py

models/svc.pkl: data/processed/xy.pkl src/train_model.py

@echo "Training model..."

python src/train_model.py

pipeline: data/processed/xy.pkl models/svc.pkTo create the file models/svc.pkl , you can run:

make models/svc.pklSince data/processed/xy.pkl and src/train_model.py are the prerequisites of the models/svc.pkl target, make runs the recipes to create both data/processed/xy.pkl and models/svc.pkl .

Processing data...

python src/process.py

Training model...

python src/train_model.pyIf there are no changes in the prerequisite of models/svc.pkl, make will skip updating models/svc.pkl .

$ make models/svc.pkl

make: `models/svc.pkl' is up to date.Thus, with make, you avoid wasting time on running unnecessary tasks.

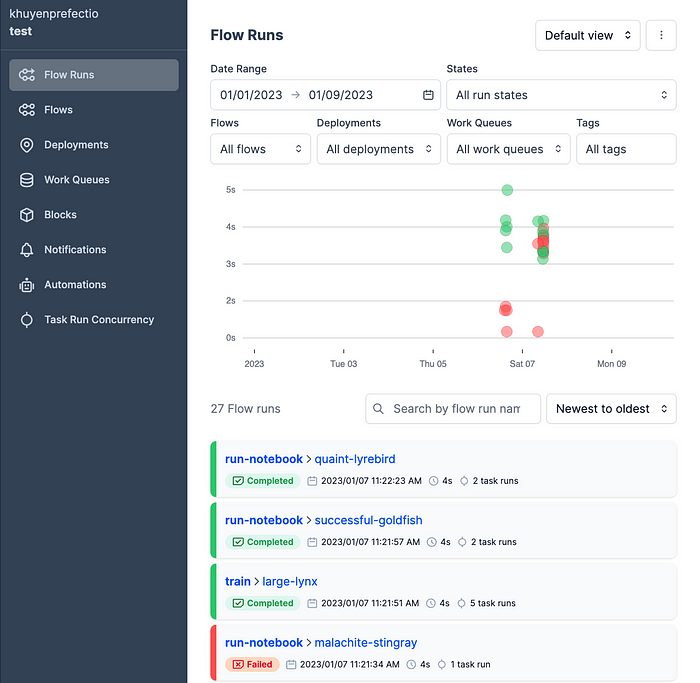

Observe and Automate Your Code

This template leverages Prefect to:

- Observe all your runs from the Prefect UI.

Among others, Prefect can help you:

- Retry when your code fails

- Schedule your code run

- Send notifications when your flow fails

You can access these features by simply turning your function into a Prefect flow.

from prefect import flow

@flow

def process(

location: Location = Location(),

config: ProcessConfig = ProcessConfig(),

):

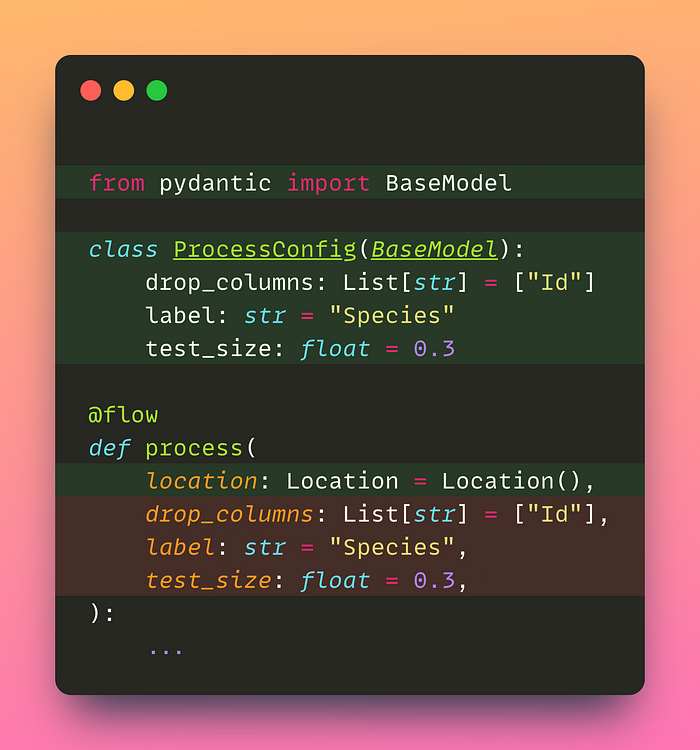

...Enforce Type Hints At Runtime

Pydantic is a Python library for data validation by leveraging type annotations.

Pydantic models enforce data types on flow parameters and validate their values when a flow run is executed.

If the value of a field doesn’t match the type annotation, you will get an error at runtime:

process(config=ProcessConfig(test_size='a'))pydantic.error_wrappers.ValidationError: 1 validation error for ProcessConfig

test_size

value is not a valid float (type=type_error.float)All Pydantic models are in the src/config.py file.

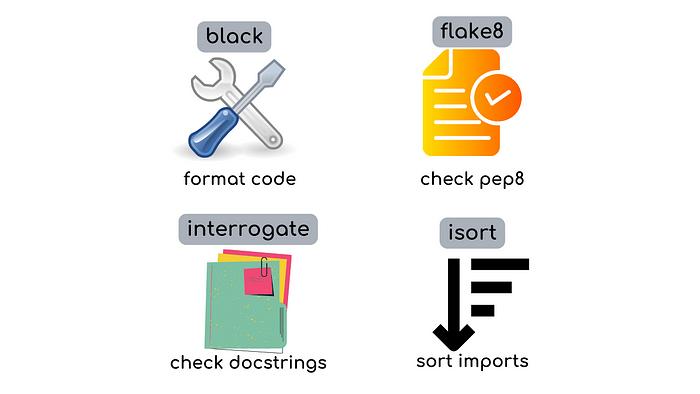

Detect Issues in Your Code Before Committing

Before committing your Python code to Git, you need to make sure your code:

- passes unit tests

- is organized

- conforms to best practices and style guides

- is documented

However, manually checking these criteria before committing your code can be tedious. pre-commit is a framework that allows you to identify issues in your code before committing it.

You can add different plugins to your pre-commit pipeline. Once your files are committed, they will be validated against these plugins. Unless all checks pass, no code will be committed.

You can find all plugins used in this template in this .pre-commit-config.yaml file.

Automatically Document Your Code

Data scientists often collaborate with other team members on a project. Thus, it is essential to create good documentation for the project.

To create API documentation based on docstrings of your Python files and objects, run:

make docs_viewOutput:

Save the output to docs...

pdoc src --http localhost:8080

Starting pdoc server on localhost:8080

pdoc server ready at http://localhost:8080Now you can view the documentation on http://localhost:8080.

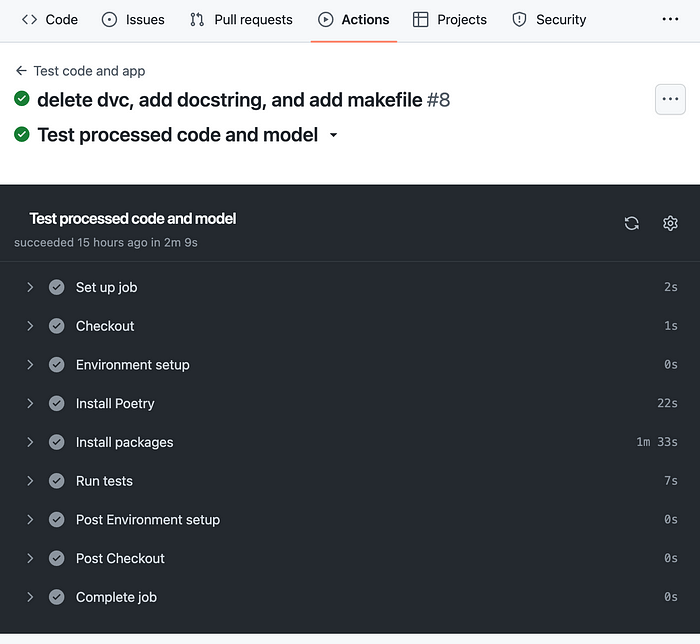

Automatically Run Tests

GitHub Actions allows you to automate your CI/CD pipelines, making it faster to build, test, and deploy your code.

When creating a pull request on GitHub, the tests in your tests folder will automatically run.

Conclusion

Congratulations! You have just learned how to use a template to create a reusable and maintainable ML project. This template is meant to be flexible. Feel free to adjust the project based on your applications.