Challenges in Anomaly Detection

Anomaly detection can be challenging and time-consuming, requiring complex statistical calculations and multiple algorithm implementations. Without specialized tools, data scientists may write redundant code or miss important anomalies using overly simplistic approaches.

Here’s an example of a simple statistical method (z-score) for detecting outliers:

import numpy as np

def detect_outliers(data):

mean = np.mean(data)

std = np.std(data)

z_scores = [(x - mean) / std for x in data]

outliers = [x for x, z in zip(data, z_scores) if abs(z) > 3]

return outliers

data = [1, 2, 2, 3, 3, 4, 1000, 2, 3]

outliers = detect_outliers(data)

print(f"{outliers=}")

Output:

mean=113.333

std=313.485

outliers=[]However, this approach can be flawed, as shown in the example where the outlier (1000) is not detected due to its influence on the standard deviation.

PyOD Solution

PyOD provides a consistent API across different algorithms and includes both traditional statistical methods and modern deep-learning approaches. Here’s an example using PyOD:

Importing necessary modules:

from pyod.models.knn import KNN

from pyod.utils.data import generate_data

from pyod.utils.data import evaluate_print

from pyod.utils.example import visualize

Generating sample data:

# Define the percentage of outliers

contamination = 0.1

# Define the number of training and testing points

n_train = 200

n_test = 100

# Generate the sample data

X_train, X_test, y_train, y_test = generate_data(

n_train=n_train, n_test=n_test, contamination=contamination)

Output:

# X_train: (200, 2) - 200 training data points with 2 features each

# X_test: (100, 2) - 100 testing data points with 2 features each

# y_train: (200,) - labels for the training data points (0 for inliers, 1 for outliers)

# y_test: (100,) - labels for the testing data points (0 for inliers, 1 for outliers)

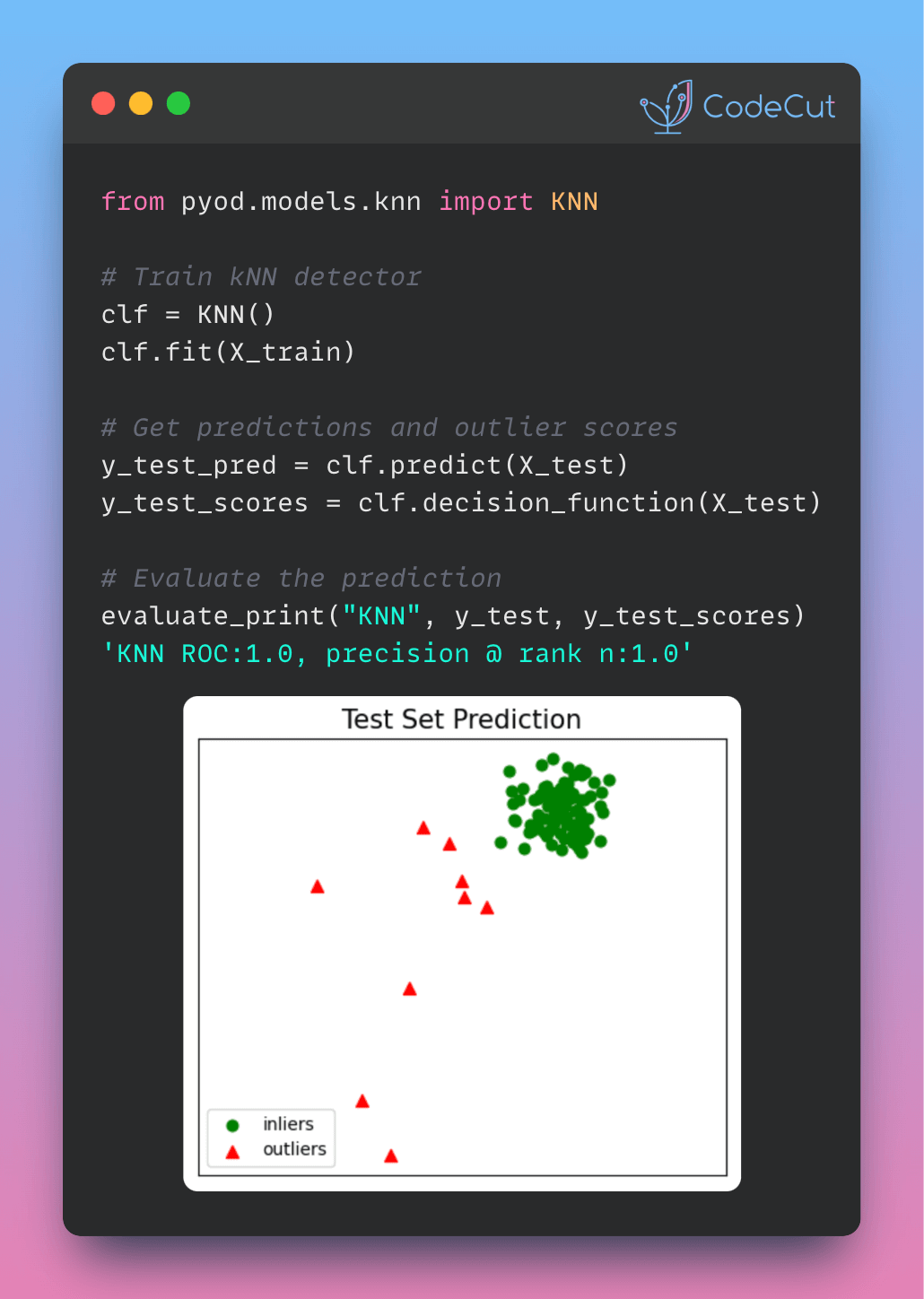

Initializing and fitting the KNN detector:

# Define the name of the detector

clf_name = 'KNN'

# Initialize the KNN detector

clf = KNN()

# Fit the KNN detector to the training data

clf.fit(X_train)

Output:

# clf: A fitted KNN detector object

Evaluating the detector’s performance:

# Evaluate the detector's performance on the training data

print("\nOn Training Data:")

evaluate_print(clf_name, y_train, clf.decision_scores_)

# Evaluate the detector's performance on the testing data

print("\nOn Test Data:")

evaluate_print(clf_name, y_test, clf.decision_function(X_test))

Output:

On Training Data:

KNN ROC:1.0, precision @ rank n:1.0

On Test Data:

KNN ROC:1.0, precision @ rank n:1.0

Visualizing the results:

# Visualize the results

visualize(clf_name, X_train, y_train, X_test, y_test, clf.labels_,

clf.predict(X_test), show_figure=True, save_figure=False)

Output:

Benefits of Using PyOD

PyOD provides several benefits, including:

- Easy implementation: PyOD provides a simple and consistent API across different algorithms, making it easy to implement various state-of-the-art outlier detection methods.

- Multiple algorithms: PyOD includes both traditional statistical methods and modern deep learning approaches, allowing users to choose the best algorithm for their specific use case.

- Multivariate data handling: PyOD can handle multivariate data, making it suitable for a wide range of applications.

- Efficient performance evaluation: PyOD provides efficient performance evaluation metrics, such as ROC and Precision @ Rank n, to help users assess the effectiveness of their chosen algorithm.

By using PyOD, data scientists can easily detect anomalies in their data without writing redundant code or missing important outliers due to simplistic approaches.