Managing data integrity and business rules in pandas DataFrames is often difficult, especially with large datasets or multiple contributors. This can lead to inconsistent or invalid data.

Delta Lake’s constraint feature solves this by enabling table-level rule definition and enforcement. This ensures only data meeting specific criteria can be added to the table.

Let’s look at a practical example:

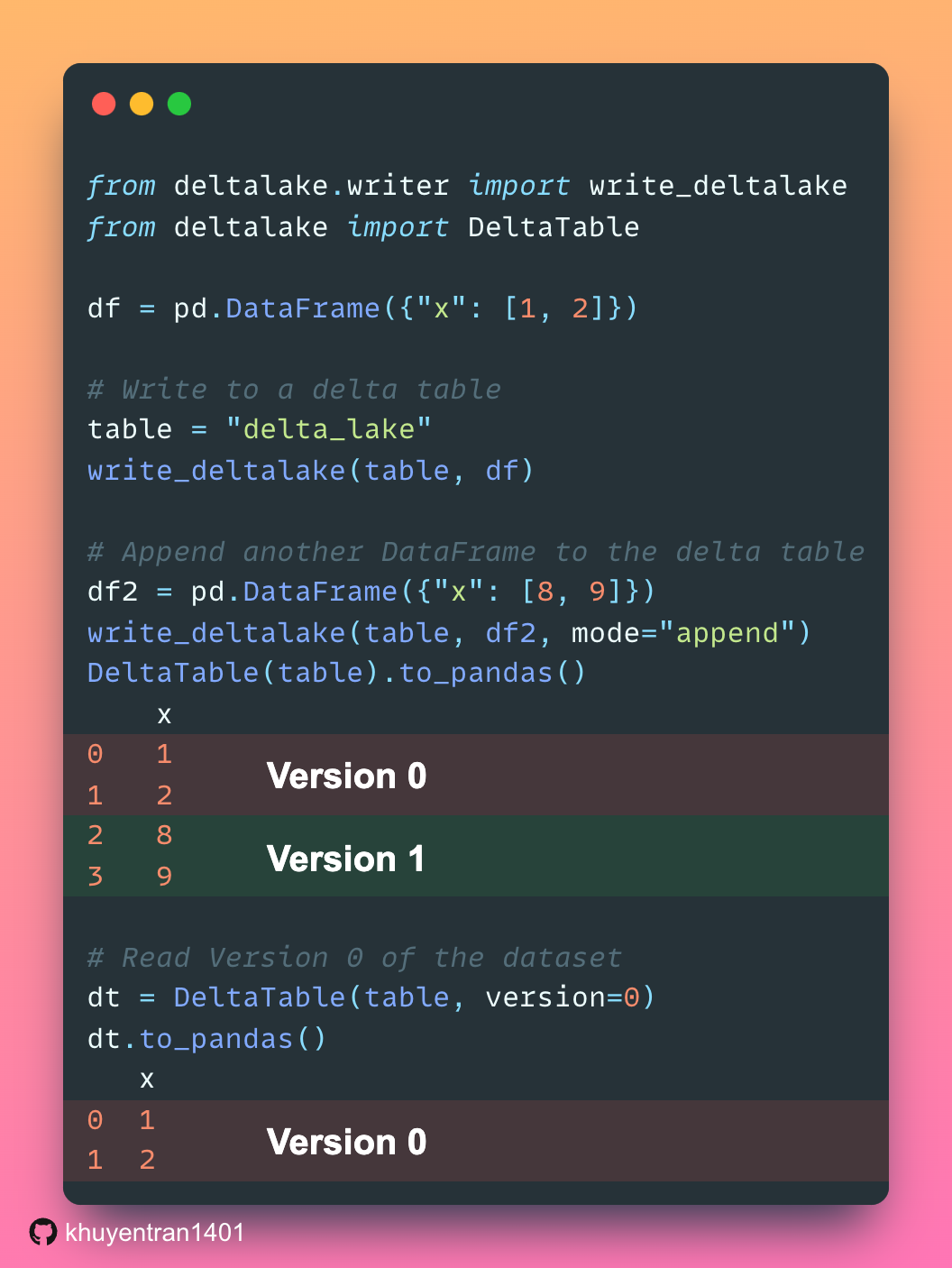

import pandas as pd

from deltalake.writer import write_deltalake

from deltalake import DeltaTable

# Define the path for our Delta Lake table

table_path = "delta_lake"

# Create an initial DataFrame

df1 = pd.DataFrame(

[

(1, "John", 5000),

(2, "Jane", 6000),

],

columns=["employee_id", "employee_name", "salary"],

)

# Write the initial data to Delta Lake

write_deltalake(table_path, df1)

# View the initial data

df

employee_idemployee_namesalary01John500012Jane6000

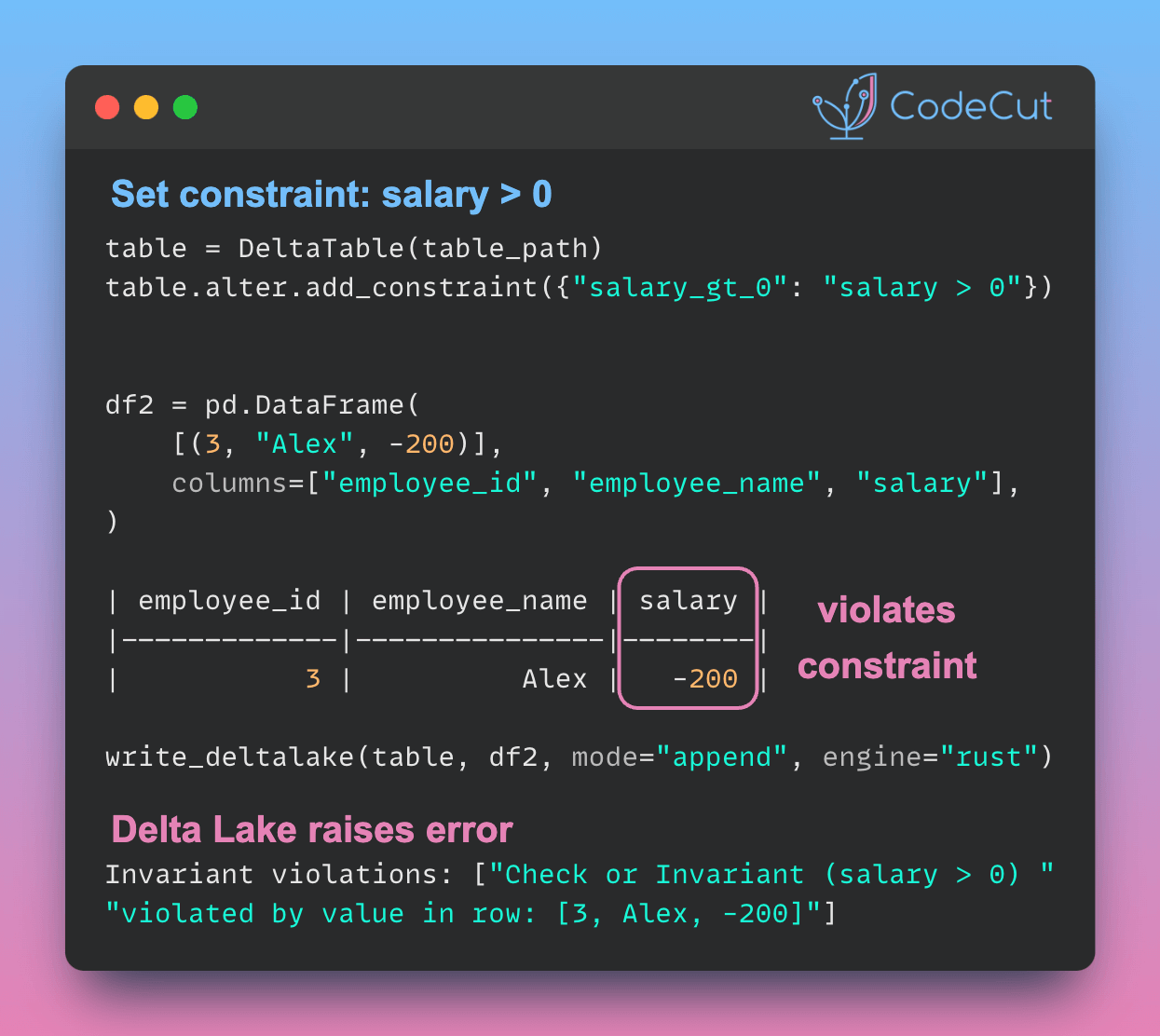

Now, let’s add a constraint to ensure all salaries are positive:

table = DeltaTable(table_path)

table.alter.add_constraint({"salary_gt_0": "salary > 0"})

With this constraint in place, let’s try to add a new record with a negative salary:

df2 = pd.DataFrame(

[(3, "Alex", -200)],

columns=["employee_id", "employee_name", "salary"],

)

write_deltalake(table, df2, mode="append", engine="rust")

Running this code results in an error:

DeltaProtocolError: Invariant violations: ["Check or Invariant (salary > 0) violated by value in row: [3, Alex, -200]"]

As we can see, the constraint we added prevented the insertion of invalid data. This is incredibly powerful because it:

Enforces data integrity at the table level.

Prevents accidental insertion of invalid data.

Maintains consistency across all operations on the table.

Link to delta-rs.

Favorite