Motivation

Data scientists and analysts often want to steer topic modeling with domain knowledge or with specific themes they expect to find, but most traditional topic modeling approaches offer little direct control over which topics are generated.

Introduction to BERTopic

Topic modeling is a text mining technique that discovers abstract topics in a collection of documents. It helps in organizing, searching, and understanding large volumes of text data by finding common themes or patterns.

BERTopic is a topic modeling library that combines transformer-based embeddings (such as BERT) with c-TF-IDF (class-based TF-IDF) to create easily interpretable topics. You can install it with pip:

```bash
pip install bertopic
```

As covered in BERTopic: Harnessing BERT for Interpretable Topic Modeling, the library provides powerful topic visualization and automatic topic discovery. In this post, we cover guided topic modeling.
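To make the c-TF-IDF step mentioned above more concrete, here is a simplified NumPy sketch of class-based TF-IDF as BERTopic's documentation describes it: all documents in a topic are treated as one class, and each term's in-class frequency is multiplied by log(1 + A / f_t), where A is the average number of words per class and f_t is the term's frequency across all classes. The function name `c_tf_idf` and the toy counts are hypothetical, and the library's own implementation has options this sketch leaves out.

```python
import numpy as np


def c_tf_idf(class_term_counts: np.ndarray) -> np.ndarray:
    """Weight terms per class (topic) with a c-TF-IDF-style formula.

    class_term_counts: shape (n_classes, n_terms); row c holds the summed
    term counts of all documents assigned to class c. Assumes every term
    occurs at least once somewhere (otherwise f_t would be zero).
    """
    counts = class_term_counts.astype(float)
    # Term frequency within each class, normalized by the class's word count
    tf = counts / counts.sum(axis=1, keepdims=True)
    # f_t: frequency of each term across all classes
    term_freq = counts.sum(axis=0)
    # A: average number of words per class
    avg_words = counts.sum(axis=1).mean()
    # idf = log(1 + A / f_t): terms spread across many classes get smaller weights
    idf = np.log(1 + avg_words / term_freq)
    return tf * idf


# Hypothetical vocabulary and per-class counts
vocab = ["space", "orbit", "game", "team", "the"]
counts = np.array([
    [8, 5, 0, 0, 10],  # a "space" class
    [0, 0, 7, 6, 10],  # a "sports" class
])
weights = c_tf_idf(counts)
for c, row in enumerate(weights):
    top_two = [vocab[i] for i in np.argsort(row)[::-1][:2]]
    print(c, top_two)
# 0 ['space', 'orbit']
# 1 ['game', 'team']
```

Even though "the" is the most frequent word in both toy classes, its low IDF pushes it below the class-specific terms, which is what makes c-TF-IDF topic words easy to interpret.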

Guided Topic Modeling with Seed Words

Seed words are predefined sets of words that represent themes or topics you expect or want to find in your documents. BERTopic allows you to guide the topic modeling process using these seed words. By providing seed words, you can:

- Direct the model towards specific themes of interest

- Incorporate domain expertise into the topic discovery process

- Ensure certain important themes are captured

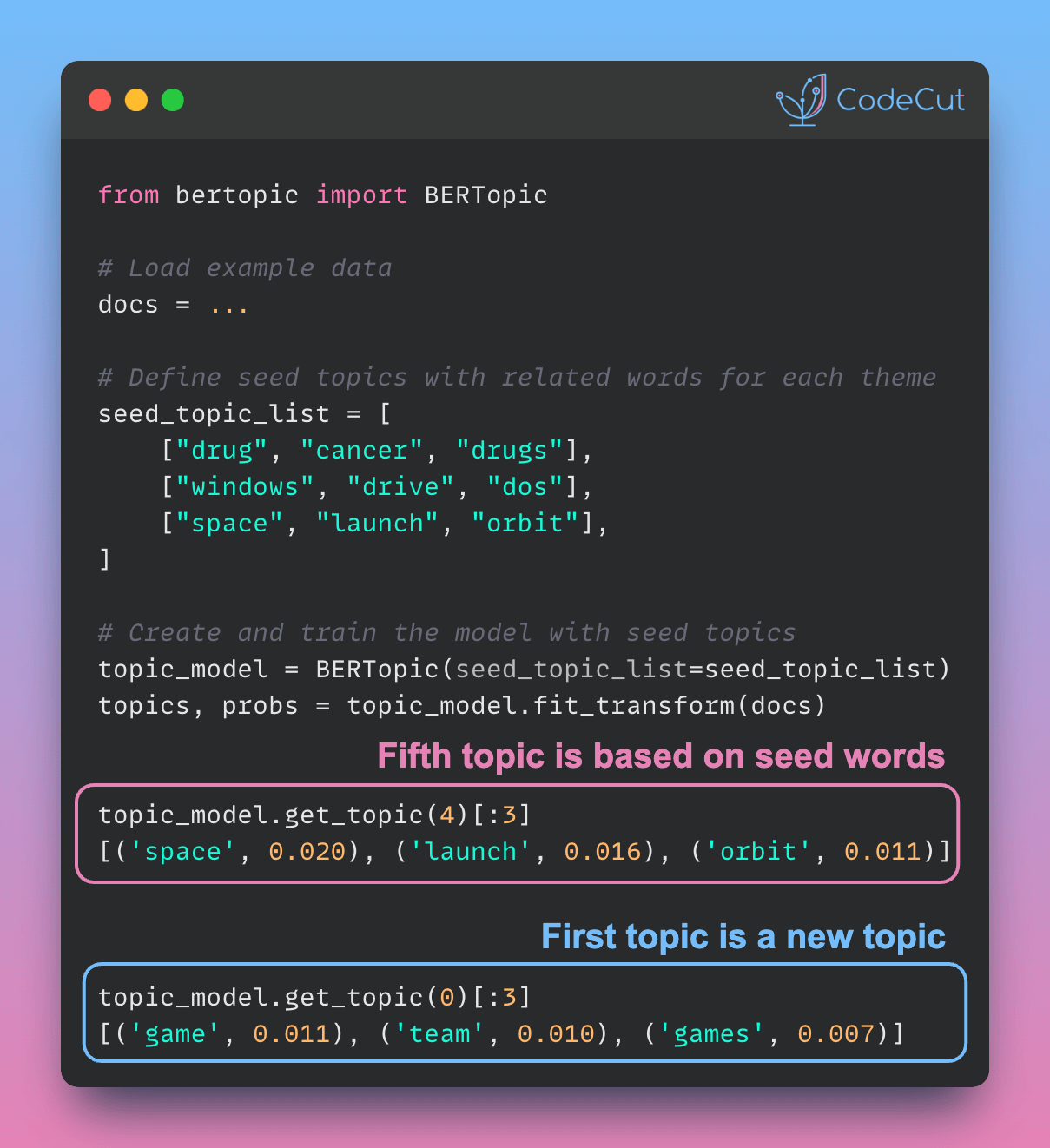
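BERTopic's documentation describes how seed words enter the pipeline: the words of each seed topic are joined into one string and embedded, every document embedding is compared with these seed embeddings by cosine similarity, and a document most similar to a seed topic receives that topic's label, while one most similar to the average document embedding receives -1. Those labels then feed UMAP in a semi-supervised way. The NumPy sketch below reproduces only that labeling step, assuming toy 3-dimensional embeddings in place of a real embedding model; the function names are hypothetical.

```python
import numpy as np


def cosine_sim(a: np.ndarray, b: np.ndarray) -> np.ndarray:
    """Pairwise cosine similarity between the rows of a and the rows of b."""
    a = a / np.linalg.norm(a, axis=1, keepdims=True)
    b = b / np.linalg.norm(b, axis=1, keepdims=True)
    return a @ b.T


def label_by_seed_topics(doc_embeddings: np.ndarray,
                         seed_embeddings: np.ndarray) -> np.ndarray:
    """Label each document with its most similar seed topic, or -1."""
    # The mean document embedding acts as a catch-all "no seed topic" anchor
    anchors = np.vstack([seed_embeddings, doc_embeddings.mean(axis=0)])
    sims = cosine_sim(doc_embeddings, anchors)
    labels = sims.argmax(axis=1)
    # Documents closest to the mean embedding get the -1 label
    labels[labels == len(seed_embeddings)] = -1
    return labels


# Hypothetical 3-d embeddings; in BERTopic these would come from the
# embedding model, with each seed topic's words joined into one string
seed_embeddings = np.array([[1.0, 0.0, 0.0],   # e.g. medical seed words
                            [0.0, 1.0, 0.0]])  # e.g. space seed words
doc_embeddings = np.array([[0.9, 0.1, 0.1],
                           [0.1, 0.9, 0.1],
                           [0.1, 0.1, 0.9],
                           [0.0, 0.2, 1.0]])
labels = label_by_seed_topics(doc_embeddings, seed_embeddings)
print(labels.tolist())  # [0, 1, -1, -1]
```

The last two documents point away from both seed topics, so they stay unguided (-1) and remain free to form topics of their own.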

Here’s how to implement guided topic modeling with seed words:

```python
from bertopic import BERTopic
from sklearn.datasets import fetch_20newsgroups

# Load example data
docs = fetch_20newsgroups(subset='all', remove=('headers', 'footers', 'quotes'))['data']

# Define seed topics with related words for each theme
seed_topic_list = [
    ["drug", "cancer", "drugs", "doctor"],   # Medical theme
    ["windows", "drive", "dos", "file"],     # Computer theme
    ["space", "launch", "orbit", "lunar"],   # Space theme
]

# Create and train the model with seed topics
topic_model = BERTopic(seed_topic_list=seed_topic_list)
topics, probs = topic_model.fit_transform(docs)
```
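According to BERTopic's documentation, seed words also act at the word-weighting stage: every word in `seed_topic_list` gets a multiplier larger than 1 on its IDF value across all topics, which makes seeded words more likely to appear in topic representations. The sketch below shows that idea on hypothetical counts; the helper name `seed_boosted_idf` and the multiplier value 1.2 are assumptions for illustration, not the library's API.

```python
import numpy as np


def seed_boosted_idf(class_term_counts, vocab, seed_words, multiplier=1.2):
    """c-TF-IDF-style IDF with an extra multiplier on seed words (sketch)."""
    counts = np.asarray(class_term_counts, dtype=float)
    # idf = log(1 + A / f_t), A = average words per class, f_t = term frequency
    idf = np.log(1 + counts.sum(axis=1).mean() / counts.sum(axis=0))
    # Seed words are scaled by the (assumed) multiplier; other words keep 1.0
    factors = np.array([multiplier if word in seed_words else 1.0 for word in vocab])
    return idf * factors


seed_topic_list = [
    ["drug", "cancer", "drugs", "doctor"],
    ["windows", "drive", "dos", "file"],
    ["space", "launch", "orbit", "lunar"],
]
# Flatten every seed topic into one set of boosted words
seed_words = {word for topic in seed_topic_list for word in topic}

vocab = ["space", "launch", "shuttle", "mission"]
counts = [[9, 6, 3, 3],  # hypothetical counts for a space-like class
          [1, 1, 1, 2]]  # hypothetical counts for another class
plain = seed_boosted_idf(counts, vocab, seed_words, multiplier=1.0)
boosted = seed_boosted_idf(counts, vocab, seed_words)
# Ratio per word: the seed words 'space' and 'launch' are scaled, the rest are not
print(np.round(boosted / plain, 2))
```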

Let’s examine how the model processes these seed words and discovers topics:

```python
# Examine discovered topics
print("\nFirst topic (Sports):")
print(topic_model.get_topic(0))

print("\nSecond topic (Cryptography):")
print(topic_model.get_topic(1))

print("\nFifth topic (Space Exploration):")
print(topic_model.get_topic(4))
```

Output:

```
First topic (Sports):
[('game', 0.010652), ('team', 0.009260), ('games', 0.007348),
 ('he', 0.007269), ('players', 0.006459), ('season', 0.006363),
 ('hockey', 0.006247), ('play', 0.005889), ('25', 0.005802),
 ('year', 0.005770)]

Second topic (Cryptography):
[('key', 0.015048), ('clipper', 0.012965), ('chip', 0.012280),
 ('encryption', 0.011336), ('keys', 0.010264), ('escrow', 0.008797),
 ('government', 0.007993), ('nsa', 0.007754), ('algorithm', 0.007132),
 ('be', 0.006736)]

Fifth topic (Space Exploration):
[('space', 0.019632), ('launch', 0.016378), ('orbit', 0.010814),
 ('lunar', 0.010734), ('moon', 0.008701), ('nasa', 0.007899),
 ('shuttle', 0.006732), ('mission', 0.006472), ('earth', 0.006001),
 ('station', 0.005720)]
```

The results show how seed words influence topic discovery:

- Seed Word Integration: In the fifth topic (topic 4), all four space-related seed words (‘space’, ‘launch’, ‘orbit’, ‘lunar’) carry the highest weights. The model expands on them with related terms such as ‘moon’, ‘nasa’, ‘shuttle’, ‘mission’, and ‘station’.

- Natural Topic Discovery: The model still surfaces prominent unseeded topics such as sports (topic 0) and cryptography (topic 1), even though it was seeded only with medical, computer, and space themes. This indicates that seed words guide the model without constraining it to the seeded themes.
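To check the seed word integration observation programmatically, a small helper can split a topic's top words into the seed words the model kept and the terms it added. `get_topic` returns a list of (word, weight) tuples like the output above, so in practice you could pass `topic_model.get_topic(4)` directly; the helper name `seed_coverage` is hypothetical, and the data below is copied from the fifth topic's printed output.

```python
def seed_coverage(topic_words, seeds):
    """Split a topic's (word, weight) list into matched seeds and added terms."""
    words = [word for word, _ in topic_words]
    # Seed words that survived into the topic's top words, in seed order
    matched = [seed for seed in seeds if seed in words]
    # Top words the model contributed beyond the seed list
    added = [word for word in words if word not in seeds]
    return matched, added


# Fifth topic (ID 4) as printed in the output above
space_topic = [
    ("space", 0.019632), ("launch", 0.016378), ("orbit", 0.010814),
    ("lunar", 0.010734), ("moon", 0.008701), ("nasa", 0.007899),
    ("shuttle", 0.006732), ("mission", 0.006472), ("earth", 0.006001),
    ("station", 0.005720),
]
matched, added = seed_coverage(space_topic, ["space", "launch", "orbit", "lunar"])
print(matched)  # ['space', 'launch', 'orbit', 'lunar']
print(added)    # ['moon', 'nasa', 'shuttle', 'mission', 'earth', 'station']
```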

Conclusion

Guided topic modeling with seed words in BERTopic balances user expertise with automated topic discovery. Seed words steer the model toward specific themes of interest, while the model stays free to discover other important topics and to expand the seeded topics with related terms, giving a more complete picture of the corpus.