What is Polars?

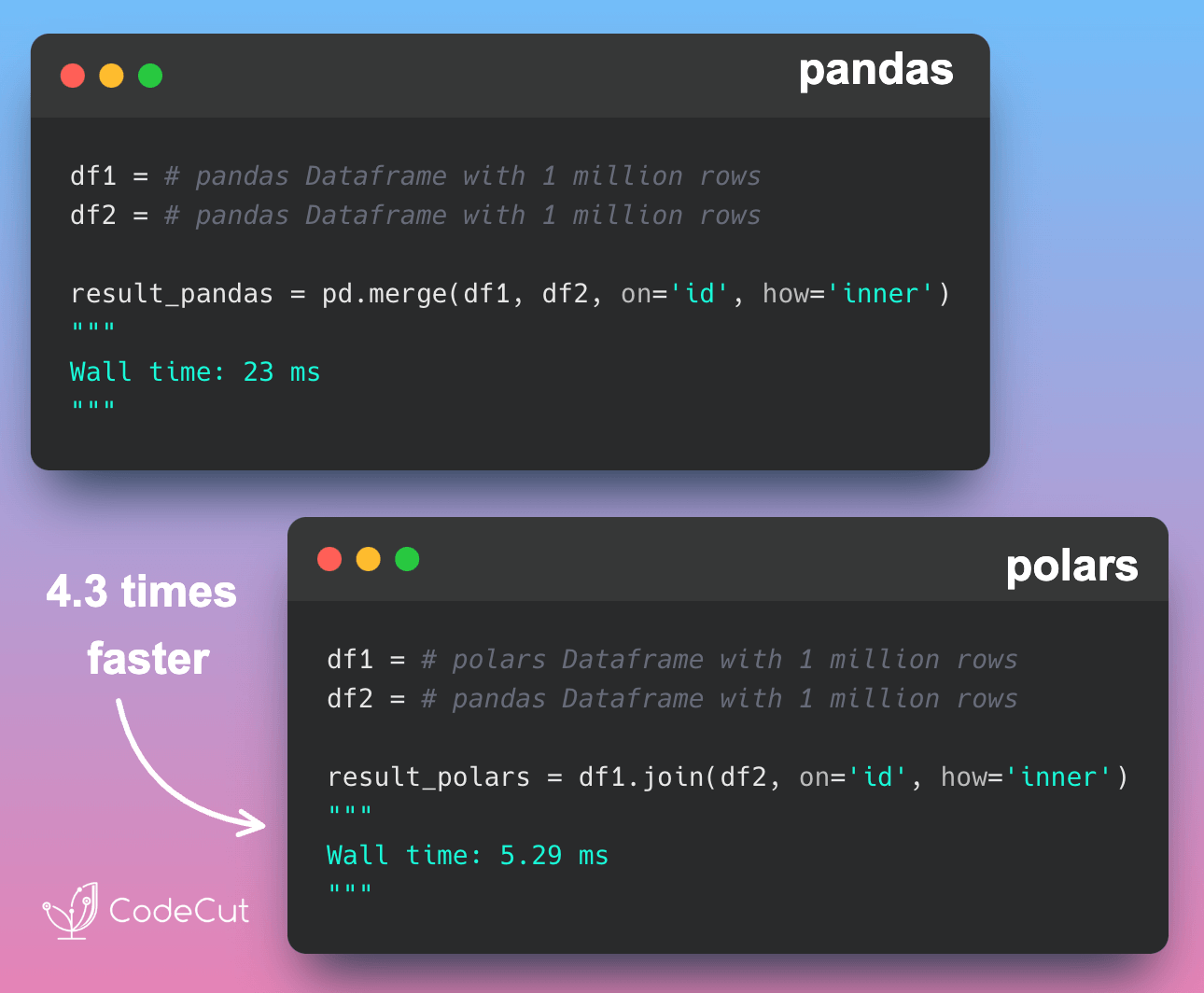

Polars is a Rust-based DataFrame library that is designed for high-performance data manipulation and analysis. It uses SIMD instructions, parallelization, and caching to achieve speeds that are often orders of magnitude faster than other DataFrame libraries.

What is Delta Lake?

Delta Lake is a storage format that offers a range of benefits, including ACID transactions, time travel, schema enforcement, and more. It’s designed to work seamlessly with big data processing engines like Apache Spark and can handle large amounts of data with ease.

How Do Polars and Delta Lake Work Together?

When you combine Polars and Delta Lake, you get a powerful data processing system. Polars does the heavy lifting of processing your data, while Delta Lake keeps everything organized and up-to-date.

Imagine you have a huge dataset with millions of rows. You want to group the data by category and calculate the sum of a certain column. With Polars and Delta Lake, you can do this quickly and easily.

First, you create a sample dataset:

import pandas as pd

import numpy as np

# Create a sample dataset

num_rows = 10_000_000

data = {

'Cat1': np.random.choice(['A', 'B', 'C'], size=num_rows),

'Num1': np.random.randint(low=1, high=100, size=num_rows)

}

df = pd.DataFrame(data)

df.head()| Cat1 | Num1 | |

|---|---|---|

| 0 | A | 84 |

| 1 | C | 63 |

| 2 | B | 11 |

| 3 | A | 73 |

| 4 | B | 57 |

Next, you save the dataset to Delta Lake:

from deltalake.writer import write_deltalake

save_path = "tmp/data"

write_deltalake(save_path, df)Then, you can use Polars to read the data from Delta Lake and perform the grouping operation:

import polars as pl

pl_df = pl.read_delta(save_path)

pl_df.group_by("Cat1").sum()shape: (3, 2)

┌──────┬───────────┐

│ Cat1 ┆ Num1 │

│ --- ┆ --- │

│ str ┆ i64 │

╞══════╪═══════════╡

│ B ┆ 166653474 │

│ C ┆ 166660653 │

│ A ┆ 166597835 │

└──────┴───────────┘Time Travel

One of Delta Lake’s best features is the ability to travel back in time to previous versions of your data. This is called time travel. With Delta Lake, you can easily revert to a previous version of your data if you need to.

Let’s say you want to append some new data to the existing dataset:

new_data = pd.DataFrame({"Cat1": ["B", "C"], "Num1": [2, 3]})

write_deltalake(save_path, new_data, mode="append")Now, you can use Polars to read the updated data from Delta Lake:

updated_pl_df = pl.read_delta(save_path)

updated_pl_df.tail()shape: (5, 2)

┌──────┬──────┐

│ Cat1 ┆ Num1 │

│ --- ┆ --- │

│ str ┆ i64 │

╞══════╪══════╡

│ A ┆ 29 │

│ A ┆ 41 │

│ A ┆ 49 │

│ B ┆ 2 │

│ C ┆ 3 │

└──────┴──────┘But what if you want to go back to the previous version of the data? With Delta Lake, you can easily do that by specifying the version number:

previous_pl_df = pl.read_delta(save_path, version=0)

previous_pl_df.tail()shape: (5, 2)

┌──────┬──────┐

│ Cat1 ┆ Num1 │

│ --- ┆ --- │

│ str ┆ i64 │

╞══════╪══════╡

│ A ┆ 90 │

│ C ┆ 83 │

│ A ┆ 29 │

│ A ┆ 41 │

│ A ┆ 49 │

└──────┴──────┘This will give you the original dataset before the new data is appended.

Links

Want the full walkthrough?

Check out our in-depth guide on Polars vs Pandas: A Fast, Multi-Core Alternative for DataFrames