In PySpark, temporary views are virtual tables that can be queried using SQL, enabling code reusability and modularity.

To demonstrate this, let’s create a PySpark DataFrame called orders_df.

# Create a sample DataFrame

data = [

(1001, "John Doe", 500.0),

(1002, "Jane Smith", 750.0),

(1003, "Bob Johnson", 300.0),

(1004, "Sarah Lee", 400.0),

(1005, "Tom Wilson", 600.0),

]

columns = ["customer_id", "customer_name", "revenue"]

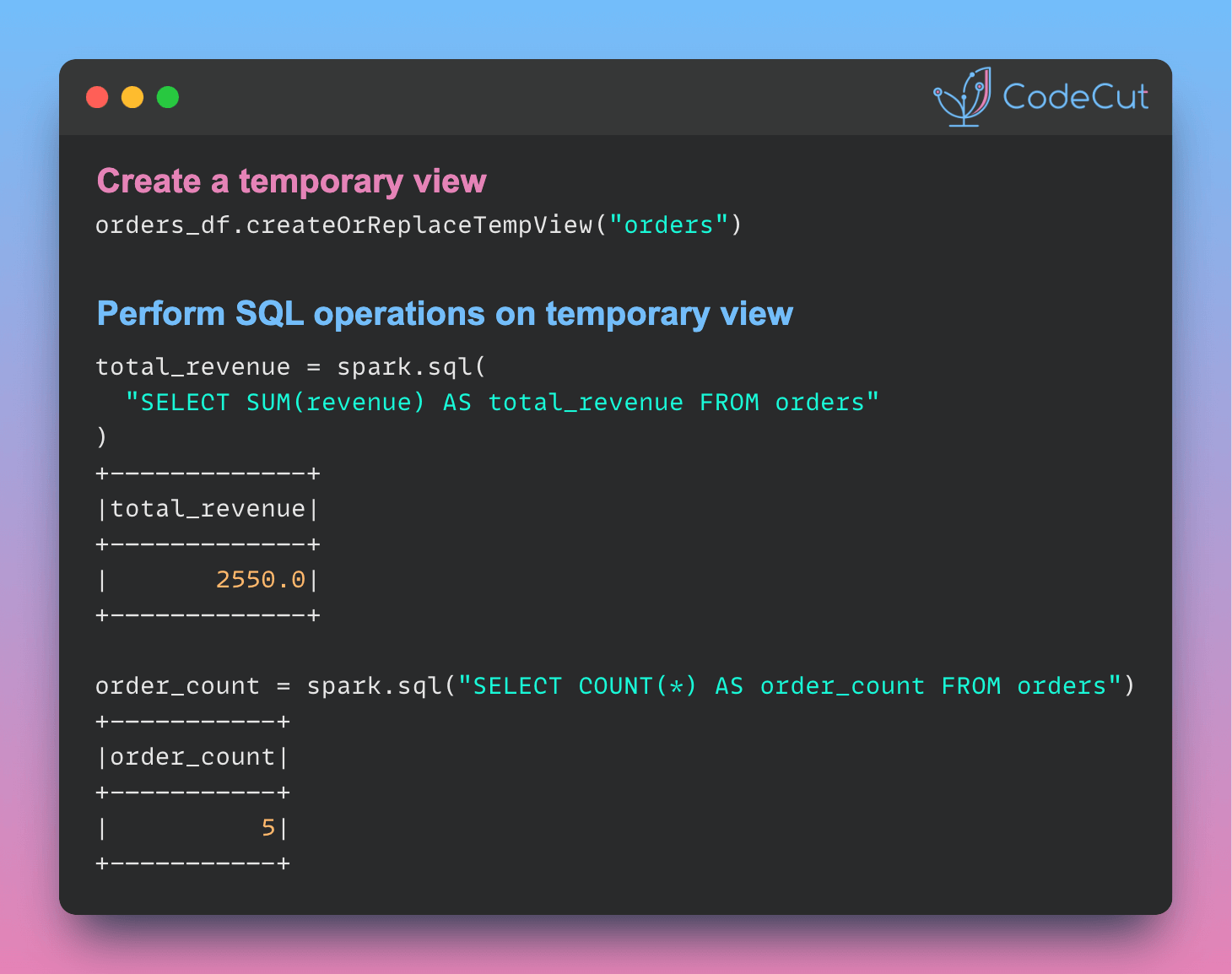

orders_df = spark.createDataFrame(data, columns)Next, create a temporary view called orders from the orders_df DataFrame using the createOrReplaceTempView method.

# Create a temporary view

orders_df.createOrReplaceTempView("orders")With the temporary view created, we can perform various operations on it using SQL queries.

# Perform operations on the temporary view

total_revenue = spark.sql("SELECT SUM(revenue) AS total_revenue FROM orders")

order_count = spark.sql("SELECT COUNT(*) AS order_count FROM orders")

# Display the results

print("Total Revenue:")

total_revenue.show()

print("\nNumber of Orders:")

order_count.show()Output:

Total Revenue:

+-------------+

|total_revenue|

+-------------+

| 2550.0|

+-------------+

Number of Orders:

+-----------+

|order_count|

+-----------+

| 5|

+-----------+