Table of Contents

- Introduction

- Feature Interactions: From Nested Loops to Combinations

- Polynomial Features: From Manual Assignments to Automated Generation

- Sequence Patterns: From Manual Tracking to Permutations

- Cartesian Products: From Nested Loops to Product

- Efficient Sampling: From Full Data Loading to Islice

- Final Thoughts

Introduction

Imagine writing nested loops to build combinatorial features. They work well at first, but as your feature engineering scales, this custom code becomes bug-prone and hard to debug or extend.

```python
for i in range(len(numerical_features)):
    for j in range(i + 1, len(numerical_features)):
        df[f"{numerical_features[i]}_x_{numerical_features[j]}"] = (
            df[numerical_features[i]] * df[numerical_features[j]]
        )
```

Python's built-in itertools module provides battle-tested, memory-efficient iterator functions that make data science code shorter and more reliable. Here are the five most useful ones for data science projects:

- combinations(): Generate unique pairs from lists without repetition
- combinations_with_replacement(): Generate combinations including self-pairs
- permutations(): Generate all possible orderings
- product(): Create all possible pairings across multiple lists
- islice(): Extract slices from iterators without loading full datasets

Stay Current with CodeCut

Actionable Python tips, curated for busy data pros. Skim in under 2 minutes, three times a week.

Key Takeaways

Here’s what you’ll learn:

- Replace complex nested loops with battle-tested itertools functions for feature interactions

- Generate polynomial features systematically using combinations_with_replacement

- Create sequence patterns and categorical combinations without manual index management

- Sample large, lazily loaded datasets with islice instead of reading everything into memory

- Reduce feature engineering bugs by relying on mathematically precise combinatorial functions

Setup

Before we dive into the examples, let’s set up the sample dataset.

```python
import numpy as np
import pandas as pd
from itertools import (
    combinations,
    combinations_with_replacement,
    islice,
    permutations,
    product,
)

# Create simple sample dataset
np.random.seed(42)
data = {
    "age": np.random.randint(20, 65, 20),
    "income": np.random.randint(30000, 120000, 20),
    "experience": np.random.randint(0, 40, 20),
    "education_years": np.random.randint(12, 20, 20),
}
df = pd.DataFrame(data)

numerical_features = ["age", "income", "experience", "education_years"]
print(df.head())
```

| age | income | experience | education_years |
|---|---|---|---|
| 58 | 94925 | 2 | 15 |
| 48 | 97969 | 36 | 13 |
| 34 | 35311 | 6 | 19 |
| 62 | 113104 | 20 | 15 |
| 27 | 83707 | 8 | 13 |

Feature Interactions: From Nested Loops to Combinations

Custom Code

Creating feature interactions manually requires careful index management to avoid duplicates and self-interactions. While possible, this approach becomes error-prone and complex as the number of features grows.

```python
# Manual approach - proper nested loops with index management
df_manual = df.copy()

for i in range(len(numerical_features)):
    for j in range(i + 1, len(numerical_features)):
        feature1, feature2 = numerical_features[i], numerical_features[j]
        interaction_name = f"{feature1}_x_{feature2}"
        df_manual[interaction_name] = df_manual[feature1] * df_manual[feature2]

print(f"First few: {list(df_manual.columns[4:7])}")
```

```
First few: ['age_x_income', 'age_x_experience', 'age_x_education_years']
```

With Itertools

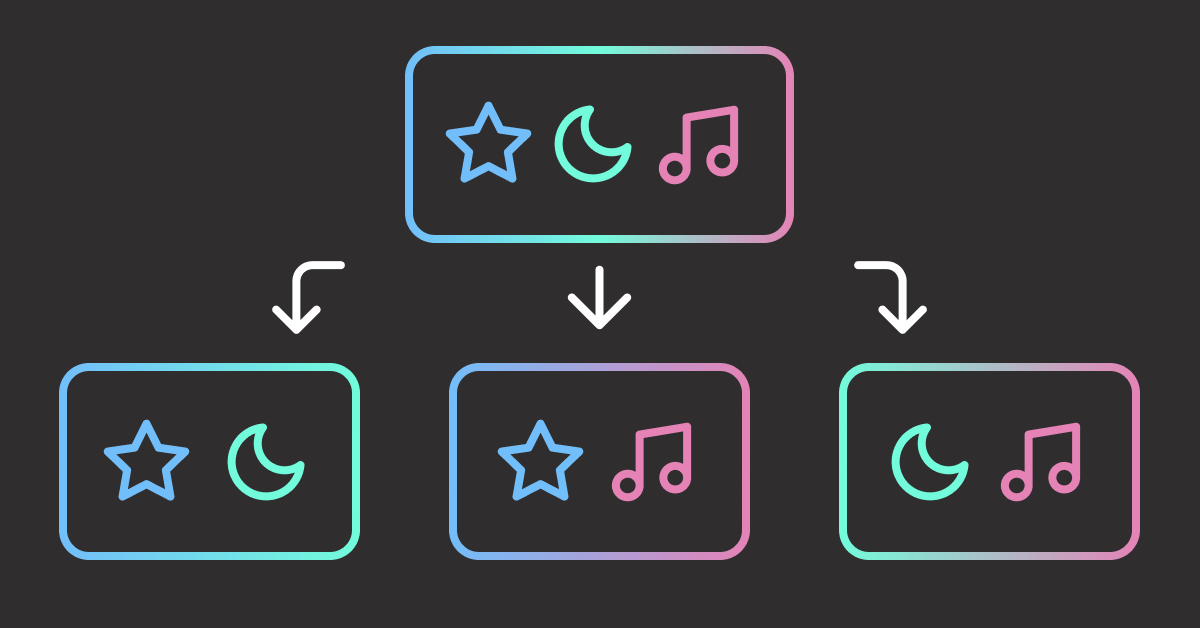

Use combinations() to generate every unique group of r items from a list, where order does not matter and no item is repeated within a group.

For example, combinations(['A','B','C'], 2) yields (A,B), (A,C), (B,C).

![combinations(['A','B','C'], 2)](https://codecut.ai/wp-content/uploads/2025/07/diagram-export-7-26-2025-10_41_00-AM.png)
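To sanity-check what combinations() produces, you can compare its length with the binomial coefficient from math.comb. This sketch reuses the four feature names from the setup; the 20-feature list is a hypothetical example showing how quickly pairwise interactions add up.

```python
from itertools import combinations
from math import comb

features = ["age", "income", "experience", "education_years"]

# Pairwise interactions: C(4, 2) = 6 unordered pairs, no self-pairs
pairs = list(combinations(features, 2))
print(pairs[:3])  # [('age', 'income'), ('age', 'experience'), ('age', 'education_years')]
print(len(pairs), comb(len(features), 2))  # 6 6

# Three-way interactions only need r=3, not another nested loop
triples = list(combinations(features, 3))
print(len(triples), comb(len(features), 3))  # 4 4

# Hypothetical wider feature set: the pair count grows roughly as n^2 / 2
wide = [f"f{i}" for i in range(20)]
n_wide_pairs = sum(1 for _ in combinations(wide, 2))
print(n_wide_pairs)  # 190
```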

Let’s apply this to feature interactions:

```python
# Automated approach with itertools.combinations
df_itertools = df.copy()

for feature1, feature2 in combinations(numerical_features, 2):
    interaction_name = f"{feature1}_x_{feature2}"
    df_itertools[interaction_name] = df_itertools[feature1] * df_itertools[feature2]

print(f"First few: {list(df_itertools.columns[4:7])}")
```

```
First few: ['age_x_income', 'age_x_experience', 'age_x_education_years']
```
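When the feature list grows, adding one column at a time in a loop can get slow, and pandas may warn that the DataFrame is highly fragmented. A minimal sketch, assuming you want every r-way product: collect the new columns in a dict and concatenate once. The helper name `add_interactions` and the tiny `demo` frame are illustrative, not part of the original example.

```python
from itertools import combinations

import pandas as pd


def add_interactions(df, features, r=2):
    """Hypothetical helper: return a copy of df with one product column per r-sized combination."""
    new_cols = {
        "_x_".join(combo): df[list(combo)].prod(axis=1)  # row-wise product of the combo's columns
        for combo in combinations(features, r)
    }
    # A single concat instead of many single-column inserts
    return pd.concat([df, pd.DataFrame(new_cols, index=df.index)], axis=1)


demo = pd.DataFrame({"a": [1, 2], "b": [3, 4], "c": [5, 6]})
out = add_interactions(demo, ["a", "b", "c"])
print(out.columns.tolist())  # ['a', 'b', 'c', 'a_x_b', 'a_x_c', 'b_x_c']
print(out["a_x_b"].tolist())  # [3, 8]
```

Passing `r=3` yields three-way interaction columns such as `a_x_b_x_c` with no extra loop.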

📚 For comprehensive production practices in data science, check out Production-Ready Data Science.

Polynomial Features: From Manual Assignments to Automated Generation

Custom Code

Creating polynomial features manually needs two separate loops, one for squared terms and one for interaction terms, just to cover all degree-2 combinations.

```python
df_manual_poly = df.copy()

# Create squared features
for feature in numerical_features:
    df_manual_poly[f"{feature}_squared"] = df_manual_poly[feature] ** 2

# Create interaction features with list slicing
for i, feature1 in enumerate(numerical_features):
    for feature2 in numerical_features[i + 1:]:
        df_manual_poly[f"{feature1}_x_{feature2}"] = df_manual_poly[feature1] * df_manual_poly[feature2]

# Show polynomial features created
polynomial_features = list(df_manual_poly.columns[4:])
print(f"First few polynomial features: {polynomial_features[:6]}")
```

```
First few polynomial features: ['age_squared', 'income_squared', 'experience_squared', 'education_years_squared', 'age_x_income', 'age_x_experience']
```

With Itertools

Use combinations_with_replacement() to generate combinations where items can repeat.

For example, combinations_with_replacement(['A','B','C'], 2) yields (A,A), (A,B), (A,C), (B,B), (B,C), (C,C).

![combinations_with_replacement(['A','B','C'], 2)](https://codecut.ai/wp-content/uploads/2025/07/diagram-export-7-26-2025-10_41_18-AM.png)
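For n features, combinations_with_replacement(features, 2) yields n squared terms plus C(n, 2) interaction terms, which equals C(n + 1, 2). This quick check, using the setup's feature names, also confirms that the non-squared tuples are exactly what combinations() returns.

```python
from itertools import combinations, combinations_with_replacement
from math import comb

features = ["age", "income", "experience", "education_years"]
n = len(features)

terms = list(combinations_with_replacement(features, 2))
squares = [t for t in terms if t[0] == t[1]]       # self-pairs such as ('age', 'age')
interactions = [t for t in terms if t[0] != t[1]]  # distinct pairs

print(len(terms), len(squares), len(interactions))  # 10 4 6
print(comb(n + 1, 2))  # 10
print(interactions == list(combinations(features, 2)))  # True
```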

With this method, a single loop covers both squared terms and interaction terms; the only branch left decides how to name each new column.

```python
# Automated approach with combinations_with_replacement
df_poly = df.copy()

# Create features using the same combinations logic
for feature1, feature2 in combinations_with_replacement(numerical_features, 2):
    if feature1 == feature2:
        # Squared feature
        df_poly[f"{feature1}_squared"] = df_poly[feature1] ** 2
    else:
        # Interaction feature
        df_poly[f"{feature1}_x_{feature2}"] = df_poly[feature1] * df_poly[feature2]

# Show polynomial features created
polynomial_features = list(df_poly.columns[4:])
print(f"First few polynomial features: {polynomial_features[:6]}")
```

```
First few polynomial features: ['age_squared', 'age_x_income', 'age_x_experience', 'age_x_education_years', 'income_squared', 'income_x_experience']
```
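combinations_with_replacement() is not limited to degree 2. The sketch below builds readable names for every monomial of a chosen degree and evaluates them on one row with math.prod. The helper `monomial_terms`, the `^` naming scheme, and the sample row are illustrative choices, not part of itertools or the original article.

```python
from collections import Counter
from itertools import combinations_with_replacement
from math import prod


def monomial_terms(features, degree):
    """Hypothetical helper: return (name, combo) for every monomial of exactly `degree`."""
    terms = []
    for combo in combinations_with_replacement(features, degree):
        counts = Counter(combo)  # e.g. ('age', 'age', 'income') -> {'age': 2, 'income': 1}
        name = "_x_".join(f if n == 1 else f"{f}^{n}" for f, n in counts.items())
        terms.append((name, combo))
    return terms


# Illustrative single row of data
row = {"age": 2, "income": 3}
values = {name: prod(row[f] for f in combo) for name, combo in monomial_terms(list(row), 3)}
print(values)
# {'age^3': 8, 'age^2_x_income': 12, 'age_x_income^2': 18, 'income^3': 27}
```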

Sequence Patterns: From Manual Tracking to Permutations

Custom Code

Hand-writing permutation logic needs one nested loop per position plus checks that skip already-used items. This becomes complex and error-prone as sequences grow longer.

```python
# Manual approach - implementing permutation logic
actions = ['login', 'browse', 'purchase']
sequence_patterns = []

# Manual permutation generation for 3 items
for i in range(len(actions)):
    for j in range(len(actions)):
        if i != j:  # Ensure different first and second
            for k in range(len(actions)):
                if k != i and k != j:  # Ensure all different
                    pattern = f"{actions[i]}_{actions[j]}_{actions[k]}"
                    sequence_patterns.append(pattern)

print(f"First few: {sequence_patterns[:3]}")
```

```
First few: ['login_browse_purchase', 'login_purchase_browse', 'browse_login_purchase']
```

With Itertools

Use permutations() to generate all possible orderings where sequence matters.

For example, permutations(['A','B','C']) yields (A,B,C), (A,C,B), (B,A,C), (B,C,A), (C,A,B), (C,B,A).

![permutations(['A','B','C'])](https://codecut.ai/wp-content/uploads/2025/07/diagram-export-7-26-2025-10_41_09-AM.png)
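permutations() also takes an optional length r. Comparing it with combinations() on the same list makes the difference concrete: ordered pairs count (login, browse) and (browse, login) separately. The counts match math.perm, available since Python 3.8.

```python
from itertools import combinations, permutations
from math import perm

actions = ["login", "browse", "purchase"]

# Ordered pairs: ('login', 'browse') and ('browse', 'login') are distinct
ordered = list(permutations(actions, 2))
unordered = list(combinations(actions, 2))

print(len(ordered), perm(3, 2))  # 6 6
print(len(unordered))            # 3
print(ordered[:2])  # [('login', 'browse'), ('login', 'purchase')]

# Without r, permutations() returns full-length orderings: 3! = 6
full_orderings = list(permutations(actions))
print(len(full_orderings))  # 6
```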

Let’s apply this to user behavior sequences:

```python
# Automated approach with itertools.permutations
actions = ['login', 'browse', 'purchase']

# Generate all sequence permutations
sequence_patterns = ['_'.join(perm) for perm in permutations(actions)]

print(f"Permutations created: {len(sequence_patterns)} patterns")
print(f"First few: {sequence_patterns[:3]}")
```

```
Permutations created: 6 patterns
First few: ['login_browse_purchase', 'login_purchase_browse', 'browse_login_purchase']
```
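One way to turn orderings into model features is a 0/1 flag per ordered pair of actions, for example "did the user log in before purchasing?". The sketch below assumes hypothetical per-user event logs (`sessions`) and a hypothetical helper `order_features`; permutations(actions, 2) enumerates the ordered pairs, so both `login_before_browse` and `browse_before_login` get their own column.

```python
from itertools import permutations

actions = ["login", "browse", "purchase"]

# Hypothetical event logs: one ordered list of actions per user
sessions = {
    "u1": ["login", "browse", "browse", "purchase"],
    "u2": ["browse", "login"],
}


def order_features(events, actions):
    """Return a 0/1 flag for every ordered pair (a, b): did a first occur before b?"""
    first_seen = {}
    for position, event in enumerate(events):
        first_seen.setdefault(event, position)  # keep only the earliest position
    return {
        f"{a}_before_{b}": int(
            a in first_seen and b in first_seen and first_seen[a] < first_seen[b]
        )
        for a, b in permutations(actions, 2)
    }


features = {user: order_features(events, actions) for user, events in sessions.items()}
print(features["u1"]["login_before_purchase"])  # 1
print(features["u2"]["browse_before_login"])    # 1
print(features["u2"]["login_before_purchase"])  # 0 (u2 never purchased)
```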

Cartesian Products: From Nested Loops to Product

Custom Code

Creating combinations between multiple categorical variables requires one nested loop per variable. Each new variable adds another level of nesting, and the number of combinations multiplies with every variable you add.

```python
# Manual approach - nested loops for categorical combinations
education_levels = ['bachelor', 'master', 'phd']
locations = ['urban', 'suburban', 'rural']
age_groups = ['young', 'middle', 'senior']

categorical_combinations = []
for edu in education_levels:
    for loc in locations:
        for age in age_groups:
            combination = f"{edu}_{loc}_{age}"
            categorical_combinations.append(combination)

print(f"Manual nested loops created {len(categorical_combinations)} combinations")
print(f"First few: {categorical_combinations[:3]}")
```

```
Manual nested loops created 27 combinations
First few: ['bachelor_urban_young', 'bachelor_urban_middle', 'bachelor_urban_senior']
```

With Itertools

Use product() to generate all possible combinations across multiple lists.

For example, product(['A','B'], ['1','2']) yields (A,1), (A,2), (B,1), (B,2).

![product(['A','B'], ['1','2'])](https://codecut.ai/wp-content/uploads/2025/07/diagram-export-7-26-2025-10_41_13-AM.png)
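The number of tuples product() yields is the product of the input lengths, which is why every added variable multiplies the output size. The sketch below checks that with math.prod and also shows the `repeat=` argument, which crosses a list with itself.

```python
from itertools import product
from math import prod

education_levels = ["bachelor", "master", "phd"]
locations = ["urban", "suburban", "rural"]

crosses = list(product(education_levels, locations))
expected = prod(len(values) for values in (education_levels, locations))
print(len(crosses), expected)  # 9 9
print(crosses[:2])  # [('bachelor', 'urban'), ('bachelor', 'suburban')]

# repeat=2 crosses one list with itself, like two nested loops over the same list
self_pairs = list(product(["A", "B"], repeat=2))
print(self_pairs)  # [('A', 'A'), ('A', 'B'), ('B', 'A'), ('B', 'B')]
```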

Let’s apply this to categorical features:

```python
# Automated approach with itertools.product
education_levels = ['bachelor', 'master', 'phd']
locations = ['urban', 'suburban', 'rural']
age_groups = ['young', 'middle', 'senior']

# Generate all combinations
combinations_list = list(product(education_levels, locations, age_groups))
categorical_features = [f"{edu}_{loc}_{age}" for edu, loc, age in combinations_list]

print(f"Product created {len(categorical_features)} combinations")
print(f"First few: {categorical_features[:3]}")
```

```
Product created 27 combinations
First few: ['bachelor_urban_young', 'bachelor_urban_middle', 'bachelor_urban_senior']
```
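Because product() accepts any number of iterables, you can keep the categorical variables in a dict and unpack them with `*`. Adding a variable then means adding a dict entry rather than another loop. The dict keys below (`edu`, `loc`, `age`) are illustrative names.

```python
from itertools import product

# Each key is a categorical variable; a new variable needs no new loop
categories = {
    "edu": ["bachelor", "master", "phd"],
    "loc": ["urban", "suburban", "rural"],
    "age": ["young", "middle", "senior"],
}

crosses = ["_".join(values) for values in product(*categories.values())]
print(len(crosses))  # 27
print(crosses[:2])   # ['bachelor_urban_young', 'bachelor_urban_middle']

# Keeping each cross as a dict is handy for building lookup tables
rows = [dict(zip(categories, values)) for values in product(*categories.values())]
print(rows[0])  # {'edu': 'bachelor', 'loc': 'urban', 'age': 'young'}
```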

Efficient Sampling: From Full Data Loading to Islice

Custom Code

A common way to sample data for prototyping is to load the entire dataset into memory and then slice it. This wastes memory and time when you only need a small subset for initial feature exploration.

```python
import sys

# Load entire dataset into memory
large_dataset = list(range(1_000_000))

# Size of the list object itself; sys.getsizeof does not count the int objects it references
dataset_mb = sys.getsizeof(large_dataset) / 1024 / 1024

# Sample only what we need
sample_data = large_dataset[:10]

# Print results
print(f"Loaded dataset: {len(large_dataset)} items ({dataset_mb:.1f} MB)")
print(f"Sample data: {sample_data}")
```

```
Loaded dataset: 1000000 items (7.6 MB)
Sample data: [0, 1, 2, 3, 4, 5, 6, 7, 8, 9]
```

With Itertools

Use islice() to take a slice of any iterator lazily: it pulls items one at a time and stops once it reaches the stop position, without loading the rest of the data.

For example, islice(large_data, 5, 10) yields items 5-9.

![islice([0,1,2,3,4,5,6,7,8,9], 3)](https://codecut.ai/wp-content/uploads/2025/07/diagram-export-7-26-2025-10_45_21-AM.png)
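islice() accepts the same start, stop, and step arguments as a slice, but it works on any iterator, including infinite ones where `[5:10]` slicing is impossible. The sketch below uses itertools.count as a stand-in for an endless data stream and also shows that islice consumes the iterator it reads from.

```python
from itertools import count, islice

# count() is an infinite iterator: 0, 1, 2, ...
stream = count()

first = list(islice(stream, 5, 10))
print(first)  # [5, 6, 7, 8, 9]

# islice consumes the underlying iterator: the next call resumes where the last one stopped
resumed = list(islice(stream, 3))
print(resumed)  # [10, 11, 12]

# start, stop and step mirror normal slicing (negative values are not allowed)
stepped = list(islice(range(20), 0, 10, 3))
print(stepped)  # [0, 3, 6, 9]
```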

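Let’s apply this to dataset sampling. Here `large_dataset` is still the list built above, so this snippet only demonstrates the syntax; the memory savings appear when islice() reads from a lazy source such as a generator or an open file, where unread items are never created.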

```python
# Process only what you need
sample_data = list(islice(large_dataset, 10))

# Calculate memory usage
sample_kb = sys.getsizeof(sample_data) / 1024

# Print results
print(f"Processed dataset: {len(sample_data)} items ({sample_kb:.2f} KB)")
print(f"Sample data: {sample_data}")
```

```
Processed dataset: 10 items (0.18 KB)
Sample data: [0, 1, 2, 3, 4, 5, 6, 7, 8, 9]
```
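With a lazy source, the difference is visible. In this minimal sketch, `read_records` is a hypothetical generator standing in for a file or database cursor; it logs every record it creates, so you can see that islice pulls only the five it needs.

```python
from itertools import islice


def read_records(n_records, log):
    """Hypothetical lazy source: yields one record at a time, e.g. rows parsed from a file."""
    for i in range(n_records):
        log.append(i)  # track which records were actually generated
        yield {"id": i, "value": i * i}


generated = []
sample = list(islice(read_records(1_000_000, generated), 5))
print(sample[-1])      # {'id': 4, 'value': 16}
print(len(generated))  # 5: the remaining 999,995 records were never created
```

The same pattern works on an open file object, since iterating over a file yields one line at a time. If you are reading a CSV with pandas, `pd.read_csv(path, nrows=...)` offers a similar shortcut.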

For even greater memory efficiency with large-scale feature engineering, consider Polars vs. Pandas: A Fast, Multi-Core Alternative for DataFrames.

Final Thoughts

Manual feature engineering requires complex index management and becomes error-prone as feature sets grow. These five itertools methods provide cleaner alternatives that express mathematical intent clearly:

- combinations() generates feature interactions without nested loops
- combinations_with_replacement() creates polynomial features systematically
- permutations() creates sequence-based features from ordered data
- product() builds categorical feature crosses efficiently
- islice() samples large, lazily loaded datasets without reading them fully into memory

Master combinations() and combinations_with_replacement() first – they solve the most common feature engineering challenges. The other three methods handle specialized tasks that become essential as your workflows grow more sophisticated.

For scaling feature engineering beyond memory limits with SQL-based approaches, explore A Deep Dive into DuckDB for Data Scientists.

📚 Want to go deeper? Learning new techniques is the easy part. Knowing how to structure, test, and deploy them is what separates side projects from real work. My book shows you how to build data science projects that actually make it to production.


2 thoughts on “5 Essential Itertools for Data Science”
