🤝 COLLABORATION

Learn ML Engineering for Free on ML Zoomcamp

Learn ML engineering for free on ML Zoomcamp and receive a certificate! Join online for practical, hands-on experience with the tech stack and workflows used in production ML. The next cohort of the course starts on September 15, 2025. Here’s what you’ll learn:

Core foundations:

- Python ecosystem: Jupyter, NumPy, Pandas, Matplotlib, Seaborn

- ML frameworks: Scikit-learn, TensorFlow, Keras

Applied projects:

- Supervised learning with the CRISP-DM framework

- Classification/regression with evaluation metrics

- Advanced models: decision trees, ensembles, neural nets, CNNs

Production deployment:

- APIs and containers: Flask, Docker, Kubernetes

- Cloud solutions: AWS Lambda, TensorFlow Serving/Lite

📅 Today’s Picks

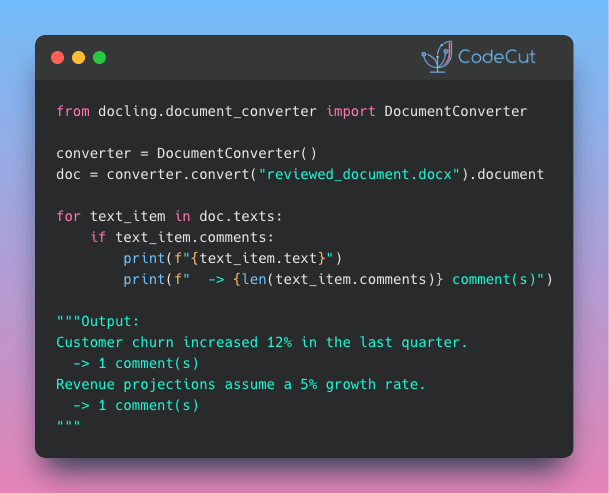

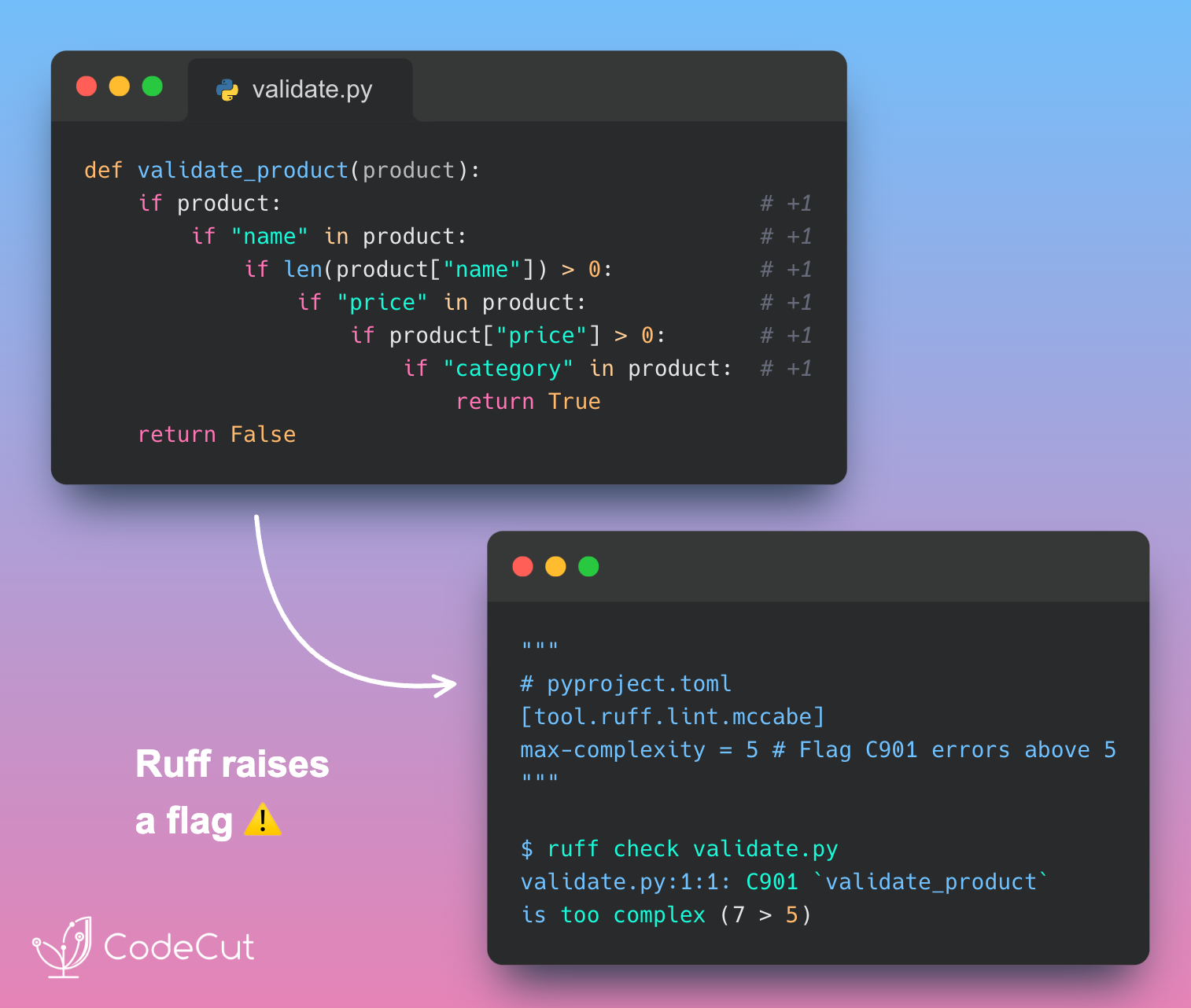

Ruff: Stop AI Code Complexity Before It Hits Production

Problem

AI agents often generate overengineered code full of nested if/else and try/except blocks, which increases technical debt and makes functions difficult to test.

Checking every function for this kind of complexity by hand is time-consuming.

Solution

Ruff’s C901 complexity check automatically flags overly complex functions before they enter your codebase.

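The rule measures McCabe (cyclomatic) complexity: it counts the decision points (if/else branches, loops, exception handlers) that create separate execution paths through a function and reports any function whose score exceeds the configured limit.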
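To make the counting concrete, here is a rough sketch using Python's standard `ast` module. It is an approximation, not Ruff's implementation: it treats each `if`/`elif`, loop, and `except` handler as one decision point and adds 1 for the function's entry path, following the usual McCabe convention. The names `approximate_complexity`, `is_too_complex`, and the `parse_age` sample are hypothetical; the default threshold of 10 mirrors Ruff's default `max-complexity` setting.

```python
import ast

# Simplified set of nodes treated as decision points in this sketch.
# Ruff's real C901 rule has its own, more detailed counting rules.
DECISION_NODES = (ast.If, ast.For, ast.AsyncFor, ast.While, ast.ExceptHandler)

SAMPLE = """
def parse_age(value):
    try:
        age = int(value)
    except ValueError:
        return None
    if age < 0:
        return None
    elif age > 150:
        return None
    return age
"""


def approximate_complexity(source: str, func_name: str) -> int:
    """Return 1 + the number of decision points inside the named function."""
    tree = ast.parse(source)
    for node in ast.walk(tree):
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)) and node.name == func_name:
            # An `elif` is parsed as a nested ast.If, so each branch counts once.
            decisions = sum(isinstance(child, DECISION_NODES) for child in ast.walk(node))
            return 1 + decisions
    raise ValueError(f"function {func_name!r} not found")


def is_too_complex(source: str, func_name: str, max_complexity: int = 10) -> bool:
    """Flag the function when its approximate score exceeds the threshold."""
    return approximate_complexity(source, func_name) > max_complexity
```

Here `parse_age` scores 4 (one `except` handler, one `if`, one `elif`), well under the default threshold of 10. Because Ruff's rule handles more constructs, its scores can differ from this sketch.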

Key benefits:

- Automatic detection of complex functions during development

- Configurable complexity thresholds for your team standards

- Integration with pre-commit hooks for automated validation

- Clear error messages showing exact complexity scores

You no longer need to rely on manual code review to catch overengineered functions.
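As a sketch of wiring the check into an automated step, the helper below builds a `ruff check` command that enables only C901 and overrides the threshold inline. It assumes a Ruff version whose `--config` flag accepts a TOML `key = value` override; in most projects you would instead set `max-complexity` under `[tool.ruff.lint.mccabe]` in `pyproject.toml` and run Ruff from a pre-commit hook. The function names and the threshold of 8 are illustrative.

```python
import subprocess


def build_ruff_c901_command(paths: list[str], max_complexity: int = 10) -> list[str]:
    """Build a `ruff check` invocation that reports only C901 violations."""
    return [
        "ruff", "check",
        "--select", "C901",  # enable only the McCabe complexity rule
        # Inline TOML override of the threshold (assumes a Ruff version that supports it)
        "--config", f"lint.mccabe.max-complexity = {max_complexity}",
        *paths,
    ]


def run_complexity_gate(paths: list[str], max_complexity: int = 8) -> int:
    """Run Ruff and return its exit code; non-zero means violations were found."""
    cmd = build_ruff_c901_command(paths, max_complexity=max_complexity)
    return subprocess.run(cmd).returncode
```

Calling `run_complexity_gate(["src"])` from a CI script and failing the job on a non-zero return code gives the same blocking behavior a pre-commit hook provides locally.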

Build Debuggable Tests: One Assertion Per Function

Problem

Tests with multiple assertions make debugging harder.

When such a test fails, its name doesn’t tell you which assertion broke, and execution stops at the first failing assertion, so the remaining checks never run.

Solution

Write a separate, focused test function for each scenario of the function under test instead of bundling them into one test.

Follow these practices for focused test functions:

- One assertion per test function for clear failure points

- Use descriptive test names that explain the expected behavior

- Maintain consistent naming patterns across your test suite

This approach makes your test suite more maintainable and failures easier to diagnose.
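Here is a minimal pytest-style sketch of the practices above. The function under test, `normalize_email`, is hypothetical; each test covers one scenario, makes one assertion, and follows a `test_<function>_<expected behavior>` naming pattern.

```python
def normalize_email(raw: str) -> str:
    """Hypothetical function under test: trim whitespace and lowercase."""
    return raw.strip().lower()


# One scenario per test; the name states the expected behavior.
def test_normalize_email_strips_surrounding_whitespace():
    assert normalize_email("  ada@example.com  ") == "ada@example.com"


def test_normalize_email_lowercases_letters():
    assert normalize_email("Ada@Example.COM") == "ada@example.com"


def test_normalize_email_leaves_clean_address_unchanged():
    assert normalize_email("ada@example.com") == "ada@example.com"
```

If lowercasing broke, pytest would report only `test_normalize_email_lowercases_letters` as failed while the other two tests still passed, so the broken behavior is visible from the test names alone.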

Stay Current with CodeCut

Actionable Python tips, curated for busy data pros. Skim in under 2 minutes, three times a week.