📅 Today’s Picks

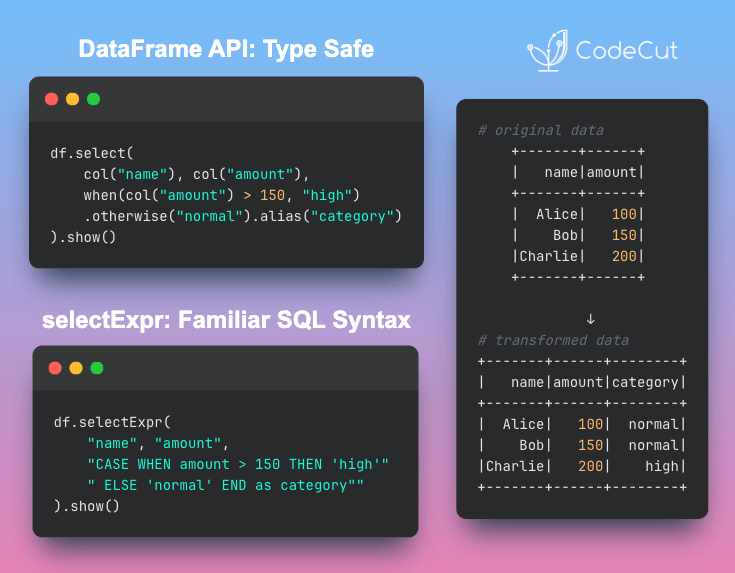

PySpark Transformations: Python API vs SQL Expressions

Problem

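PySpark lets you write column transformations in two ways: with the Python DataFrame API (functions such as col() and when()) or with SQL expression strings passed to selectExpr(). How do you know which one to use?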

Solution

Choose based on your development style and team expertise.

Use the DataFrame API if you're comfortable with Python and want Python-native development with IDE autocomplete and earlier error detection: a misspelled function name fails as a Python error before Spark runs anything.

Use selectExpr() if you're comfortable with SQL and want familiar SQL syntax, including compact CASE WHEN expressions.

Both approaches are turned into the same Catalyst expressions and optimized the same way, so they deliver the same performance. Pick the one that fits your workflow.

☕️ Weekly Finds

dotenvx [Python Utils] – An encrypted dotenv with syncing and zero-knowledge key sharing that makes .env files secure and team-friendly

databases [Data Processing] – Async database support for Python, covering PostgreSQL, MySQL, and SQLite

pomegranate [ML] – Fast, flexible probabilistic modeling in Python, implemented in PyTorch

Looking for a specific tool? Explore 70+ Python tools →

Stay Current with CodeCut

Actionable Python tips, curated for busy data pros. Skim in under 2 minutes, three times a week.