Motivation

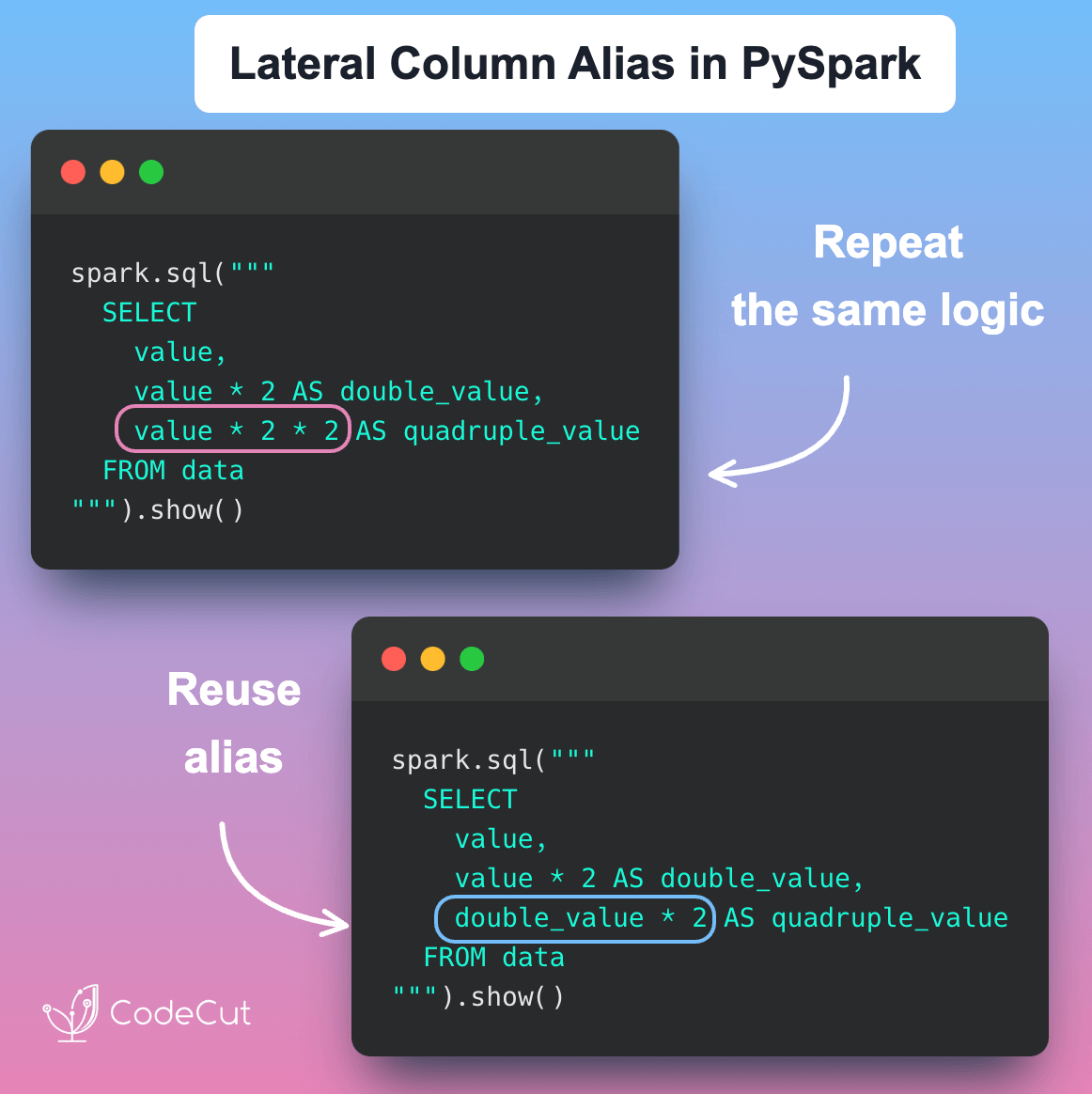

When computing new columns in PySpark, it’s common to repeat the same logic multiple times in SQL expressions.

For example, if you’re doubling a column and then doubling it again, you end up repeating value * 2 in both steps:

from pyspark.sql import SparkSession

spark = SparkSession.builder.getOrCreate()

df = spark.createDataFrame([(10,), (20,), (30,)], ["value"])

df.createOrReplaceTempView("data")

# Without lateral column aliasing

spark.sql("""

SELECT

value,

value * 2 AS double_value,

value * 2 * 2 AS quadruple_value

FROM data

""").show()

Output:

+-----+------------+---------------+

|value|double_value|quadruple_value|

+-----+------------+---------------+

| 10| 20| 40|

| 20| 40| 80|

| 30| 60| 120|

+-----+------------+---------------+

This duplication can make your logic harder to maintain and more error-prone.

Introduction to PySpark

PySpark is the Python API for Apache Spark, a powerful distributed computing system used for big data processing and analytics. You can install it using:

pip install pysparkA previous feature, Parameterized SQL queries, is covered in this article.

If you’re interested in other recent enhancements to PySpark, check out:

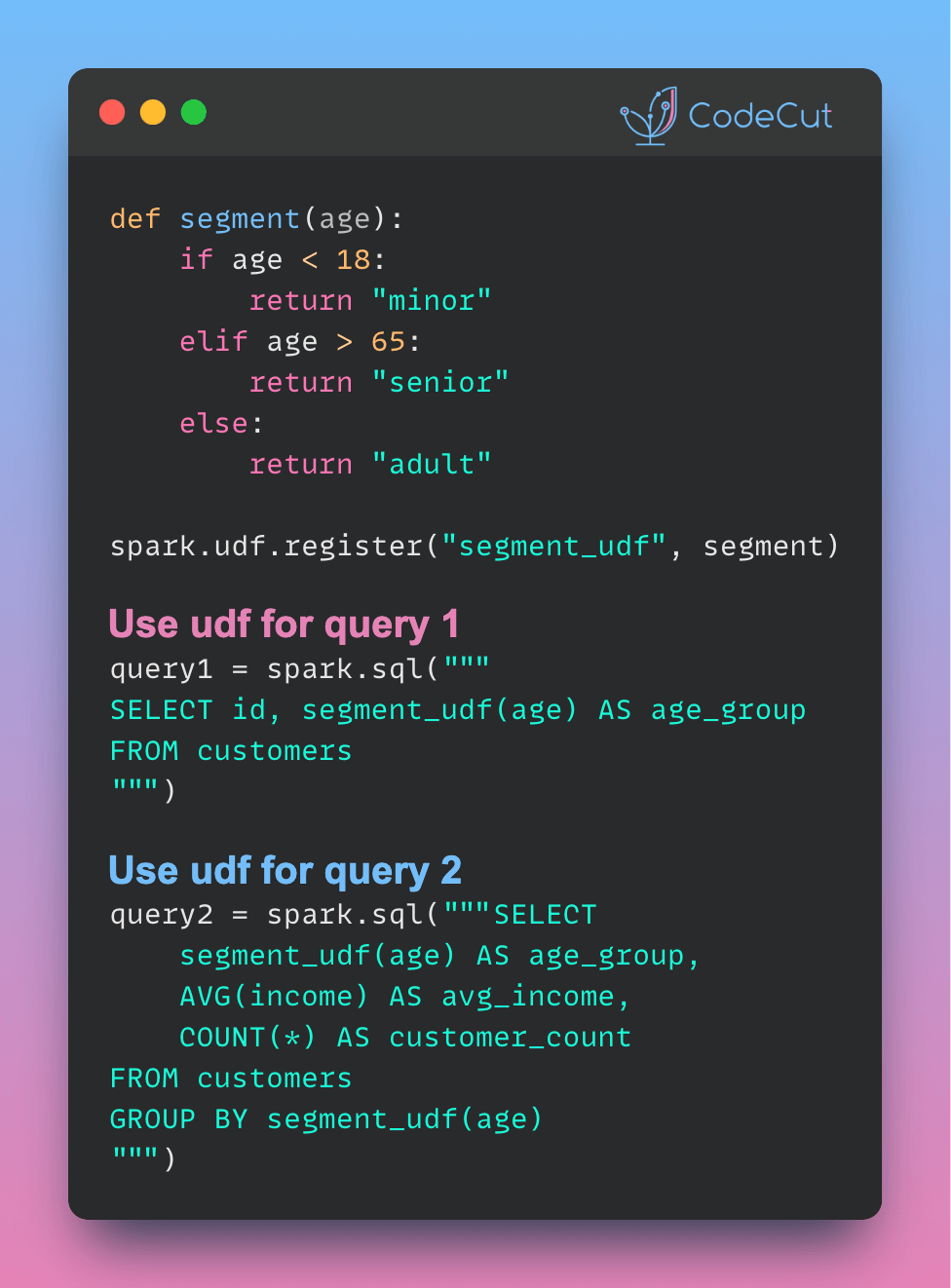

- Leverage Spark UDFs for reusable complex logic in SQL queries

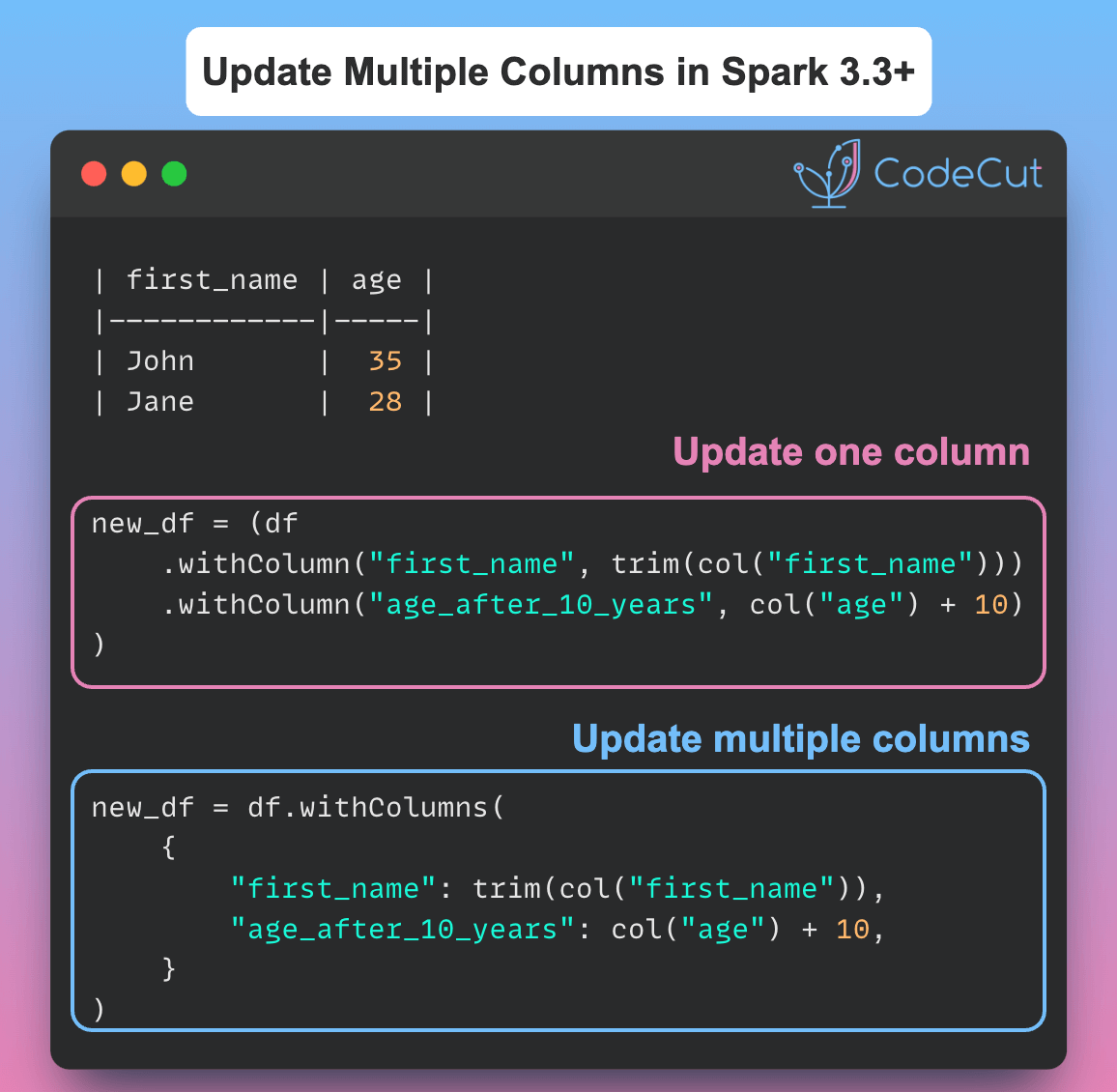

- Update multiple columns in Spark 3.3 and later

In this post, we will cover Lateral Column Alias References, introduced in PySpark version 3.4.0.

Lateral Column Alias References

PySpark now supports lateral column aliasing—this means you can reuse a previously defined alias in the same SELECT statement. Instead of duplicating logic, you define it once and refer to it later:

df.createOrReplaceTempView("data")

spark.sql("""

SELECT

value,

value * 2 AS double_value,

double_value * 2 AS quadruple_value

FROM data

""").show()

Output:

+-----+------------+---------------+

|value|double_value|quadruple_value|

+-----+------------+---------------+

| 10| 20| 40|

| 20| 40| 80|

| 30| 60| 120|

+-----+------------+---------------+

The output shows that double_value is computed once and reused for quadruple_value, keeping the logic concise.

Conclusion

Lateral column aliasing in PySpark allows you to simplify column computations by reusing aliases within the same SELECT clause. It’s a small but powerful addition that makes your SQL queries cleaner and more maintainable.