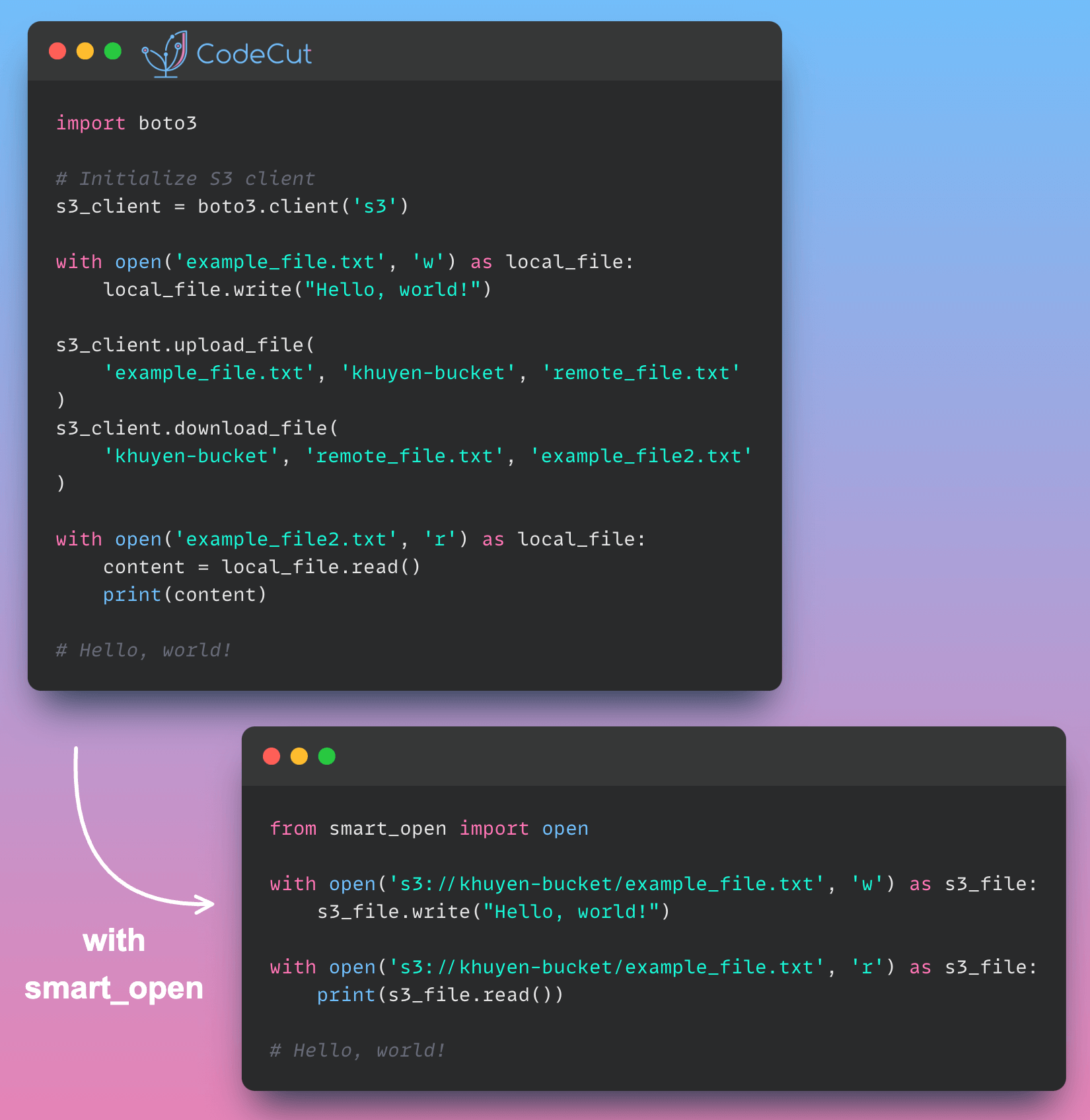

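Working with large remote files in cloud storage services like Amazon S3 can be challenging, often requiring complex code and careful object management. With boto3, even writing a short string to a bucket and reading it back means creating a local file, uploading it, downloading a copy, and opening that copy: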

```python
import boto3

# Initialize S3 client
s3_client = boto3.client('s3')

with open('example_file.txt', 'w') as local_file:
    local_file.write("Hello, world!")

s3_client.upload_file('example_file.txt', 'khuyen-bucket', 'remote_file.txt')

s3_client.download_file('khuyen-bucket', 'remote_file.txt', 'example_file2.txt')

with open('example_file2.txt', 'r') as local_file:
    content = local_file.read()
    print(content)
# Hello, world!
```

smart_open addresses these issues by providing a single open() function that works across different storage systems and file formats.

```python
from smart_open import open

with open('s3://khuyen-bucket/example_file.txt', 'w') as s3_file:
    s3_file.write("Hello, world!")

with open('s3://khuyen-bucket/example_file.txt', 'r') as s3_file:
    print(s3_file.read())
# Hello, world!
```

Notice how similar this is to working with local files. The open() function from smart_open handles all the complexities of S3 operations behind the scenes.

Another great feature of smart_open is its ability to handle compressed files transparently. Let’s say we have a gzipped file that we want to upload to S3 and then read from:

```python
from smart_open import open  # shadows the built-in open()

# Uploading a gzipped file
# The local .gz file is decompressed on read; the .gz key is compressed again on write.
with open('example_file.txt.gz', 'r') as local_file:
    with open('s3://my-bucket/example_file.txt.gz', 'w') as s3_file:
        s3_file.write(local_file.read())

# Reading a gzipped file from S3
with open('s3://my-bucket/example_file.txt.gz', 'r') as s3_file:
    content = s3_file.read()
    print(content)
# Hello, world!
```

smart_open automatically handles the decompression when reading the gzipped file, making it seamless to work with compressed files in S3.
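To make "transparent" a bit more concrete, here is a minimal standard-library sketch of the general idea: pick a (de)compressing opener based on the file extension. This is an illustration only, not smart_open's actual implementation. The names `open_by_extension`, `roundtrip`, and `_OPENERS` are hypothetical, and the sketch assumes local files opened in text mode.

```python
import bz2
import gzip
import os
import tempfile

# Hypothetical mapping from file extension to a standard-library opener.
# Illustration only; this is not smart_open's internal code.
_OPENERS = {".gz": gzip.open, ".bz2": bz2.open}


def open_by_extension(path, mode="r", encoding="utf-8"):
    """Open `path` in text mode ("r" or "w"), choosing compression by extension."""
    _, ext = os.path.splitext(path)
    opener = _OPENERS.get(ext)
    if opener is None:
        # No known compression extension: fall back to the built-in open().
        return open(path, mode, encoding=encoding)
    # gzip.open and bz2.open default to binary mode; adding "t" requests text mode.
    return opener(path, mode + "t", encoding=encoding)


def roundtrip(text, suffix):
    """Write `text` to a temporary file ending in `suffix`, then read it back."""
    with tempfile.TemporaryDirectory() as tmp_dir:
        path = os.path.join(tmp_dir, "example_file.txt" + suffix)
        with open_by_extension(path, "w") as f:
            f.write(text)
        with open_by_extension(path, "r") as f:
            return f.read()


print(roundtrip("Hello, world!", ".gz"))
# Hello, world!
```

The calling code never mentions gzip or bz2; only the file name changes. That is the same experience the smart_open example above gives for `.gz` keys in S3.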

Other examples of URLs that smart_open accepts:

```
s3://my_bucket/my_key
s3://my_key:my_secret@my_bucket/my_key
s3://my_key:my_secret@my_server:my_port@my_bucket/my_key
gs://my_bucket/my_blob
azure://my_bucket/my_blob
hdfs:///path/file
hdfs://path/file
webhdfs://host:port/path/file
./local/path/file
~/local/path/file
local/path/file
./local/path/file.gz
file:///home/user/file
file:///home/user/file.bz2
[ssh|scp|sftp]://username@host//path/file
[ssh|scp|sftp]://username@host/path/file
[ssh|scp|sftp]://username:password@host/path/file
```

If you frequently work with remote files or cloud storage services, smart_open is definitely a tool worth adding to your Python toolkit. It can save you time, reduce complexity, and make your code more maintainable.