Motivation

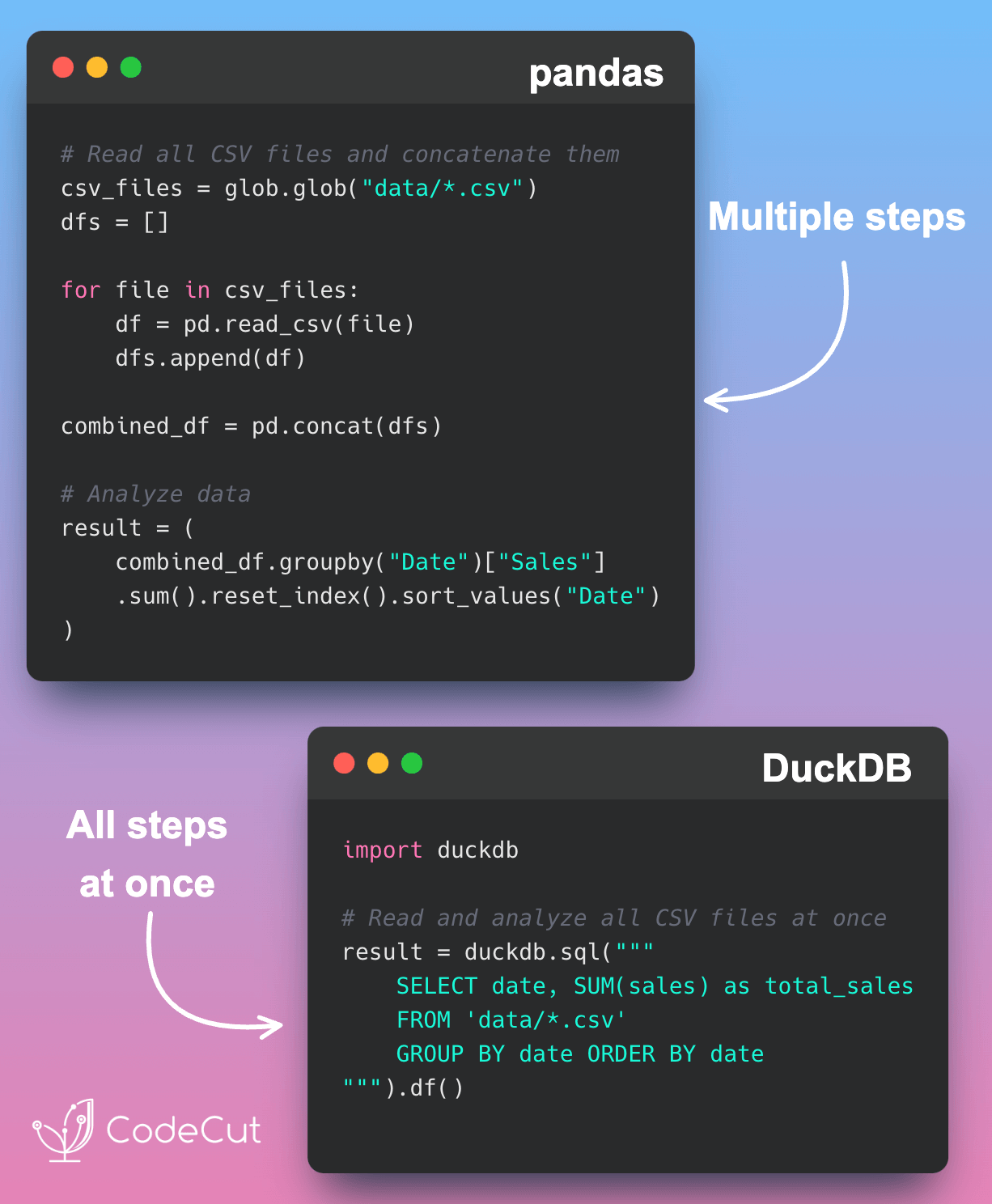

Data analysts frequently need to aggregate data across multiple CSV files for reporting and analysis. Traditional approaches require complex file handling and multiple data processing steps, which can impact productivity and code maintainability.

Here’s a typical approach that illustrates the complexity:

import glob

import pandas as pd

# Read all CSV files and concatenate them

csv_files = glob.glob("data/*.csv")

dfs = []

for file in csv_files:

df = pd.read_csv(file)

dfs.append(df)

# Concatenate all dataframes

combined_df = pd.concat(dfs, ignore_index=True)

# Perform grouping and aggregation

result = (

combined_df.groupby("Date")["Sales"]

.sum()

.reset_index()

.rename(columns={"Sales": "total_sales"})

.sort_values("Date")

)

resultOutput:

Date total_sales

0 2023-01-01 1200

1 2023-01-02 800

2 2023-01-03 600

3 2023-02-01 1500

4 2023-02-02 400

5 2023-02-03 50This approach requires multiple steps and explicit handling of data structures.

Introduction to DuckDB

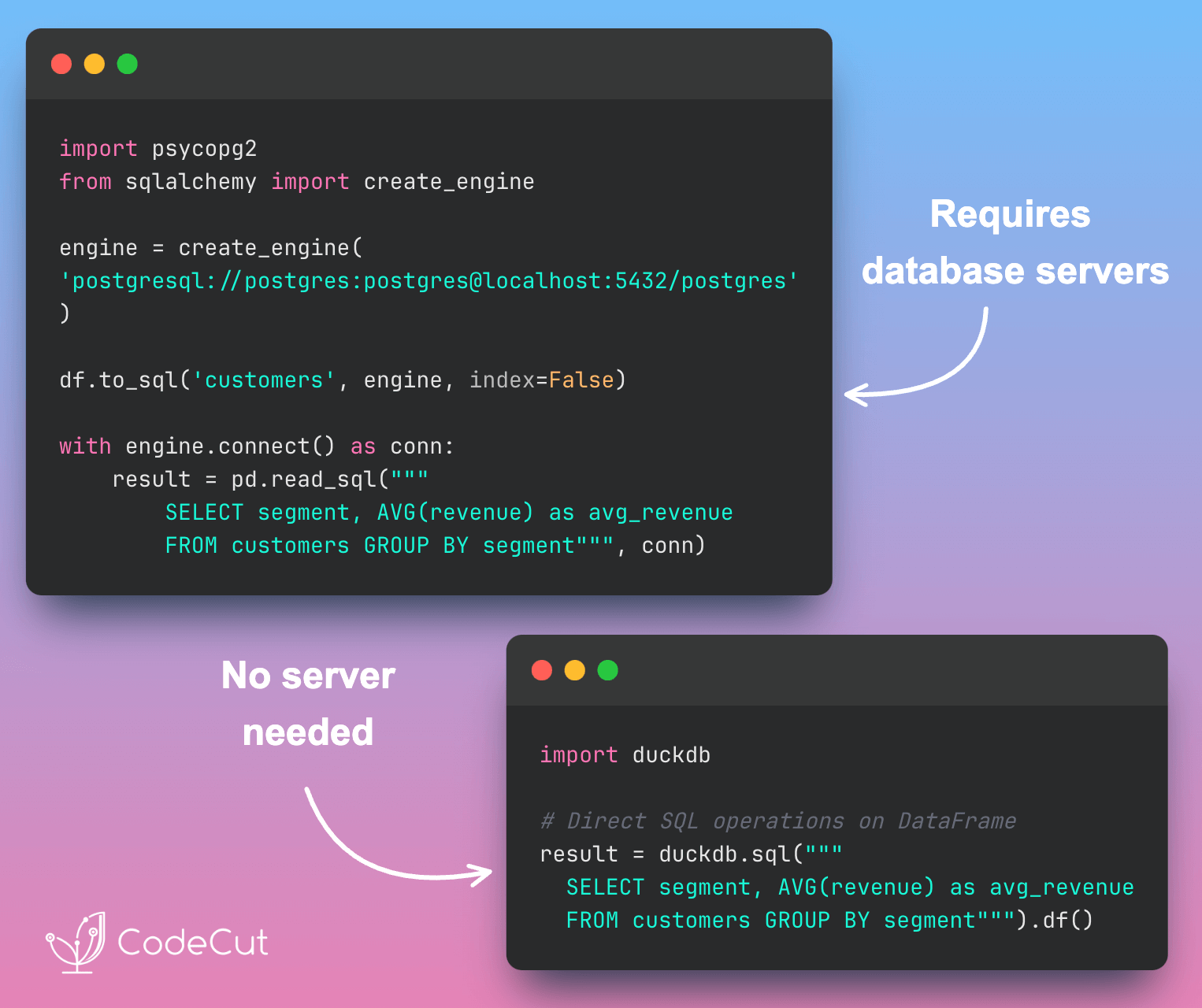

DuckDB is an analytics-focused database system that runs directly in your application process. It excels at handling analytical queries and provides seamless integration with Python data tools.

Installation is straightforward:

pip install duckdb

As covered in our article about SQL operations on DataFrames, DuckDB provides efficient SQL functionality for data analysis. We’ve also previously discussed CSV data management with DuckDB.

Pattern-Based CSV Processing

DuckDB simplifies multiple CSV file processing through SQL pattern matching. Here’s how to use it:

First, let’s create sample data:

from pathlib import Path

import pandas as pd

# Create sample data

df1 = pd.DataFrame({

"date": ["2023-01-01", "2023-01-02"],

"sales": [100, 200]

})

df2 = pd.DataFrame({

"date": ["2023-01-03", "2023-01-04"],

"sales": [300, 400]

})

# Save files

Path("data").mkdir(exist_ok=True)

df1.to_csv("data/sales_1.csv", index=False)

df2.to_csv("data/sales_2.csv", index=False)

Process all CSV files with a single SQL query:

import duckdb

# Read and analyze all CSV files at once

result = duckdb.sql("""

SELECT

date,

SUM(sales) as total_sales

FROM 'data/*.csv'

GROUP BY date

ORDER BY date

""").df()

Output:

Date total_sales

0 2023-01-01 1200

1 2023-01-02 800

2 2023-01-03 600

3 2023-02-01 1500

4 2023-02-02 400

5 2023-02-03 50In this code, DuckDB:

- Uses SQL pattern matching to find relevant files

- Automatically combines matching files

- Processes data efficiently in a single pass

- Maintains type consistency across files

Conclusion

DuckDB’s pattern-based CSV processing significantly simplifies working with multiple files, making it an excellent choice for data professionals who need to analyze data across multiple CSV files efficiently.